# Install from the macOS Terminal
#pip3 install -U numpy
#pip3 install -U scipy
#pip3 install -U pandas
#pip3 install -U matplotlib
#pip3 install -U statsmodels
#pip3 install -U seaborn
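The pip3 lines above are commented out, so the file can be re-run without reinstalling anything. The following sketch checks whether the six packages are visible to the interpreter you will use. It is not part of the original scripts. It queries installed versions with the standard-library `importlib.metadata` module, which needs Python 3.8 or later. It assumes `pip3` and `python3` point at the same Python installation.

```python
from importlib import metadata

# Distribution names taken from the pip3 commands above.
PACKAGES = ["numpy", "scipy", "pandas", "matplotlib", "statsmodels", "seaborn"]


def installed_versions(packages=PACKAGES):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Not installed for the interpreter running this script.
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:12s} {version or 'NOT INSTALLED'}")
```

If a package reports NOT INSTALLED, uncomment the matching pip3 line and run it again.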

1_MacOS_Terminal.txt

########## Open Terminal on macOS and execute
### TO UPDATE
cd "YOUR_WORKING_DIRECTORY"
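The analysis is run from `YOUR_WORKING_DIRECTORY`, so the input data files listed below need to be in that directory. Here is a minimal sketch of a check. It assumes the files keep the names used in this document. The helper `missing_data_files` is hypothetical and not part of the original material.

```python
from pathlib import Path

# Input files listed in this document; adjust if your copies are named differently.
DATA_FILES = ["brain_size.csv", "iris.csv", "CPS_85_Wages.csv", "wages.txt"]


def missing_data_files(directory=".", names=DATA_FILES):
    """Return the entries of `names` that are not regular files in `directory`."""
    base = Path(directory)
    return [name for name in names if not (base / name).is_file()]


if __name__ == "__main__":
    missing = missing_data_files()
    if missing:
        print("Missing in", Path.cwd(), ":", ", ".join(missing))
    else:
        print("All input files found in", Path.cwd())
```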

Input Data files

brain_size.csv

"";"Gender";"FSIQ";"VIQ";"PIQ";"Weight";"Height";"MRI_Count"
"1";"Female";133;132;124;"118";"64.5";816932
"2";"Male";140;150;124;".";"72.5";1001121
"3";"Male";139;123;150;"143";"73.3";1038437
"4";"Male";133;129;128;"172";"68.8";965353
"5";"Female";137;132;134;"147";"65.0";951545
"6";"Female";99;90;110;"146";"69.0";928799
"7";"Female";138;136;131;"138";"64.5";991305
"8";"Female";92;90;98;"175";"66.0";854258
"9";"Male";89;93;84;"134";"66.3";904858
"10";"Male";133;114;147;"172";"68.8";955466
"11";"Female";132;129;124;"118";"64.5";833868
"12";"Male";141;150;128;"151";"70.0";1079549
"13";"Male";135;129;124;"155";"69.0";924059
"14";"Female";140;120;147;"155";"70.5";856472
"15";"Female";96;100;90;"146";"66.0";878897
"16";"Female";83;71;96;"135";"68.0";865363
"17";"Female";132;132;120;"127";"68.5";852244
"18";"Male";100;96;102;"178";"73.5";945088
"19";"Female";101;112;84;"136";"66.3";808020
"20";"Male";80;77;86;"180";"70.0";889083
"21";"Male";83;83;86;".";".";892420
"22";"Male";97;107;84;"186";"76.5";905940
"23";"Female";135;129;134;"122";"62.0";790619
"24";"Male";139;145;128;"132";"68.0";955003
"25";"Female";91;86;102;"114";"63.0";831772
"26";"Male";141;145;131;"171";"72.0";935494
"27";"Female";85;90;84;"140";"68.0";798612
"28";"Male";103;96;110;"187";"77.0";1062462
"29";"Female";77;83;72;"106";"63.0";793549
"30";"Female";130;126;124;"159";"66.5";866662
"31";"Female";133;126;132;"127";"62.5";857782
"32";"Male";144;145;137;"191";"67.0";949589
"33";"Male";103;96;110;"192";"75.5";997925
"34";"Male";90;96;86;"181";"69.0";879987
"35";"Female";83;90;81;"143";"66.5";834344
"36";"Female";133;129;128;"153";"66.5";948066
"37";"Male";140;150;124;"144";"70.5";949395
"38";"Female";88;86;94;"139";"64.5";893983
"39";"Male";81;90;74;"148";"74.0";930016
"40";"Male";89;91;89;"179";"75.5";935863
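`brain_size.csv` is not a plain comma-separated file. Fields are separated by `;`, and the first (unnamed) column is a row number. Missing weights and heights are written as `.` (rows 2 and 21). The sketch below loads it with pandas based on those observations. The helper name `load_brain_size` is illustrative only.

```python
import io

import pandas as pd


def load_brain_size(source):
    """Read brain_size.csv: ';'-separated, first column used as the row index,
    and '.' treated as a missing value."""
    return pd.read_csv(source, sep=";", na_values=".", index_col=0)


# Usage with the first three rows copied from the file above;
# in practice pass the path instead, e.g. load_brain_size("brain_size.csv").
sample = io.StringIO(
    '"";"Gender";"FSIQ";"VIQ";"PIQ";"Weight";"Height";"MRI_Count"\n'
    '"1";"Female";133;132;124;"118";"64.5";816932\n'
    '"2";"Male";140;150;124;".";"72.5";1001121\n'
    '"3";"Male";139;123;150;"143";"73.3";1038437\n'
)
brain = load_brain_size(sample)
print(brain.dtypes)
# Mean IQ scores per gender.
print(brain.groupby("Gender")[["FSIQ", "VIQ", "PIQ"]].mean())
```

Passing `na_values="."` lets `Weight` and `Height` load as floating-point columns with NaN gaps instead of text columns.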

iris.csv

sepal_length,sepal_width,petal_length,petal_width,name
5.1,3.5,1.4,0.2,setosa
4.9,3.0,1.4,0.2,setosa
4.7,3.2,1.3,0.2,setosa
4.6,3.1,1.5,0.2,setosa
5.0,3.6,1.4,0.2,setosa
5.4,3.9,1.7,0.4,setosa
4.6,3.4,1.4,0.3,setosa
5.0,3.4,1.5,0.2,setosa
4.4,2.9,1.4,0.2,setosa
4.9,3.1,1.5,0.1,setosa
5.4,3.7,1.5,0.2,setosa
4.8,3.4,1.6,0.2,setosa
4.8,3.0,1.4,0.1,setosa
4.3,3.0,1.1,0.1,setosa
5.8,4.0,1.2,0.2,setosa
5.7,4.4,1.5,0.4,setosa
5.4,3.9,1.3,0.4,setosa
5.1,3.5,1.4,0.3,setosa
5.7,3.8,1.7,0.3,setosa
5.1,3.8,1.5,0.3,setosa
5.4,3.4,1.7,0.2,setosa
5.1,3.7,1.5,0.4,setosa
4.6,3.6,1.0,0.2,setosa
5.1,3.3,1.7,0.5,setosa
4.8,3.4,1.9,0.2,setosa
5.0,3.0,1.6,0.2,setosa
5.0,3.4,1.6,0.4,setosa
5.2,3.5,1.5,0.2,setosa
5.2,3.4,1.4,0.2,setosa
4.7,3.2,1.6,0.2,setosa
4.8,3.1,1.6,0.2,setosa
5.4,3.4,1.5,0.4,setosa
5.2,4.1,1.5,0.1,setosa
5.5,4.2,1.4,0.2,setosa
4.9,3.1,1.5,0.1,setosa
5.0,3.2,1.2,0.2,setosa
5.5,3.5,1.3,0.2,setosa
4.9,3.1,1.5,0.1,setosa
4.4,3.0,1.3,0.2,setosa
5.1,3.4,1.5,0.2,setosa
5.0,3.5,1.3,0.3,setosa
4.5,2.3,1.3,0.3,setosa
4.4,3.2,1.3,0.2,setosa
5.0,3.5,1.6,0.6,setosa
5.1,3.8,1.9,0.4,setosa
4.8,3.0,1.4,0.3,setosa
5.1,3.8,1.6,0.2,setosa
4.6,3.2,1.4,0.2,setosa
5.3,3.7,1.5,0.2,setosa
5.0,3.3,1.4,0.2,setosa
7.0,3.2,4.7,1.4,versicolor
6.4,3.2,4.5,1.5,versicolor
6.9,3.1,4.9,1.5,versicolor
5.5,2.3,4.0,1.3,versicolor
6.5,2.8,4.6,1.5,versicolor
5.7,2.8,4.5,1.3,versicolor
6.3,3.3,4.7,1.6,versicolor
4.9,2.4,3.3,1.0,versicolor
6.6,2.9,4.6,1.3,versicolor
5.2,2.7,3.9,1.4,versicolor
5.0,2.0,3.5,1.0,versicolor
5.9,3.0,4.2,1.5,versicolor
6.0,2.2,4.0,1.0,versicolor
6.1,2.9,4.7,1.4,versicolor
5.6,2.9,3.6,1.3,versicolor
6.7,3.1,4.4,1.4,versicolor
5.6,3.0,4.5,1.5,versicolor
5.8,2.7,4.1,1.0,versicolor
6.2,2.2,4.5,1.5,versicolor
5.6,2.5,3.9,1.1,versicolor
5.9,3.2,4.8,1.8,versicolor
6.1,2.8,4.0,1.3,versicolor
6.3,2.5,4.9,1.5,versicolor
6.1,2.8,4.7,1.2,versicolor
6.4,2.9,4.3,1.3,versicolor
6.6,3.0,4.4,1.4,versicolor
6.8,2.8,4.8,1.4,versicolor
6.7,3.0,5.0,1.7,versicolor
6.0,2.9,4.5,1.5,versicolor
5.7,2.6,3.5,1.0,versicolor
5.5,2.4,3.8,1.1,versicolor
5.5,2.4,3.7,1.0,versicolor
5.8,2.7,3.9,1.2,versicolor
6.0,2.7,5.1,1.6,versicolor
5.4,3.0,4.5,1.5,versicolor
6.0,3.4,4.5,1.6,versicolor
6.7,3.1,4.7,1.5,versicolor
6.3,2.3,4.4,1.3,versicolor
5.6,3.0,4.1,1.3,versicolor
5.5,2.5,4.0,1.3,versicolor
5.5,2.6,4.4,1.2,versicolor
6.1,3.0,4.6,1.4,versicolor
5.8,2.6,4.0,1.2,versicolor
5.0,2.3,3.3,1.0,versicolor
5.6,2.7,4.2,1.3,versicolor
5.7,3.0,4.2,1.2,versicolor
5.7,2.9,4.2,1.3,versicolor
6.2,2.9,4.3,1.3,versicolor
5.1,2.5,3.0,1.1,versicolor
5.7,2.8,4.1,1.3,versicolor
6.3,3.3,6.0,2.5,virginica
5.8,2.7,5.1,1.9,virginica
7.1,3.0,5.9,2.1,virginica
6.3,2.9,5.6,1.8,virginica
6.5,3.0,5.8,2.2,virginica
7.6,3.0,6.6,2.1,virginica
4.9,2.5,4.5,1.7,virginica
7.3,2.9,6.3,1.8,virginica
6.7,2.5,5.8,1.8,virginica
7.2,3.6,6.1,2.5,virginica
6.5,3.2,5.1,2.0,virginica
6.4,2.7,5.3,1.9,virginica
6.8,3.0,5.5,2.1,virginica
5.7,2.5,5.0,2.0,virginica
5.8,2.8,5.1,2.4,virginica
6.4,3.2,5.3,2.3,virginica
6.5,3.0,5.5,1.8,virginica
7.7,3.8,6.7,2.2,virginica
7.7,2.6,6.9,2.3,virginica
6.0,2.2,5.0,1.5,virginica
6.9,3.2,5.7,2.3,virginica
5.6,2.8,4.9,2.0,virginica
7.7,2.8,6.7,2.0,virginica
6.3,2.7,4.9,1.8,virginica
6.7,3.3,5.7,2.1,virginica
7.2,3.2,6.0,1.8,virginica
6.2,2.8,4.8,1.8,virginica
6.1,3.0,4.9,1.8,virginica
6.4,2.8,5.6,2.1,virginica
7.2,3.0,5.8,1.6,virginica
7.4,2.8,6.1,1.9,virginica
7.9,3.8,6.4,2.0,virginica
6.4,2.8,5.6,2.2,virginica
6.3,2.8,5.1,1.5,virginica
6.1,2.6,5.6,1.4,virginica
7.7,3.0,6.1,2.3,virginica
6.3,3.4,5.6,2.4,virginica
6.4,3.1,5.5,1.8,virginica
6.0,3.0,4.8,1.8,virginica
6.9,3.1,5.4,2.1,virginica
6.7,3.1,5.6,2.4,virginica
6.9,3.1,5.1,2.3,virginica
5.8,2.7,5.1,1.9,virginica
6.8,3.2,5.9,2.3,virginica
6.7,3.3,5.7,2.5,virginica
6.7,3.0,5.2,2.3,virginica
6.3,2.5,5.0,1.9,virginica
6.5,3.0,5.2,2.0,virginica
6.2,3.4,5.4,2.3,virginica
5.9,3.0,5.1,1.8,virginica
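`iris.csv` is an ordinary comma-separated file with a header row. The `name` column holds the species (setosa, versicolor, virginica). Below is a minimal pandas sketch that reads the file and summarizes each species. The helper `load_iris` is a hypothetical name chosen for illustration.

```python
import io

import pandas as pd


def load_iris(source):
    """Read iris.csv (comma-separated, header row); 'name' is the species label."""
    iris = pd.read_csv(source)
    # Store the species label as a categorical column.
    iris["name"] = iris["name"].astype("category")
    return iris


# Usage with a few rows copied from the file above;
# in practice pass the path, e.g. load_iris("iris.csv").
sample = io.StringIO(
    "sepal_length,sepal_width,petal_length,petal_width,name\n"
    "5.1,3.5,1.4,0.2,setosa\n"
    "4.9,3.0,1.4,0.2,setosa\n"
    "7.0,3.2,4.7,1.4,versicolor\n"
    "6.3,3.3,6.0,2.5,virginica\n"
)
iris = load_iris(sample)
# Per-species mean of each of the four measurements.
print(iris.groupby("name", observed=True).mean())
```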

CPS_85_Wages.csv

EDUCATION,SOUTH,SEX,EXPERIENCE,UNION,WAGE,AGE,RACE,OCCUPATION,SECTOR,MARR
8,0,1,21,0,5.1,35,2,6,1,1
9,0,1,42,0,4.95,57,3,6,1,1
12,0,0,1,0,6.67,19,3,6,1,0
12,0,0,4,0,4,22,3,6,0,0
12,0,0,17,0,7.5,35,3,6,0,1
13,0,0,9,1,13.07,28,3,6,0,0
10,1,0,27,0,4.45,43,3,6,0,0
12,0,0,9,0,19.47,27,3,6,0,0
16,0,0,11,0,13.28,33,3,6,1,1
12,0,0,9,0,8.75,27,3,6,0,0
12,0,0,17,1,11.35,35,3,6,0,1
12,0,0,19,1,11.5,37,3,6,1,0
8,1,0,27,0,6.5,41,3,6,0,1
9,1,0,30,1,6.25,45,3,6,0,0
9,1,0,29,0,19.98,44,3,6,0,1
12,0,0,37,0,7.3,55,3,6,2,1
7,1,0,44,0,8,57,3,6,0,1
12,0,0,26,1,22.2,44,3,6,1,1
11,0,0,16,0,3.65,33,3,6,0,0
12,0,0,33,0,20.55,51,3,6,0,1
12,0,1,16,1,5.71,34,3,6,1,1
7,0,0,42,1,7,55,1,6,1,1
12,0,0,9,0,3.75,27,3,6,0,0
11,1,0,14,0,4.5,31,1,6,0,1
12,0,0,23,0,9.56,41,3,6,0,1
6,1,0,45,0,5.75,57,3,6,1,1
12,0,0,8,0,9.36,26,3,6,1,1
10,0,0,30,0,6.5,46,3,6,0,1
12,0,1,8,0,3.35,26,3,6,1,1
12,0,0,8,0,4.75,26,3,6,0,1
14,0,0,13,0,8.9,33,3,6,0,0
12,1,1,46,0,4,64,3,6,0,0
8,0,0,19,0,4.7,33,3,6,0,1
17,1,1,1,0,5,24,3,6,0,0
12,0,0,19,0,9.25,37,3,6,1,0
12,0,0,36,0,10.67,54,1,6,0,0
12,1,0,20,0,7.61,38,1,6,2,1
12,0,0,35,1,10,53,1,6,2,1
12,0,0,3,0,7.5,21,3,6,0,0
14,1,0,10,0,12.2,30,3,6,1,1
12,0,0,0,0,3.35,18,3,6,0,0
14,1,0,14,1,11,34,3,6,1,1
12,0,0,14,0,12,32,3,6,1,1
9,0,1,16,0,4.85,31,3,6,1,1
13,1,0,8,0,4.3,27,3,6,2,0
7,1,1,15,0,6,28,3,6,1,1
16,0,0,12,0,15,34,3,6,1,1
10,1,0,13,0,4.85,29,3,6,0,0
8,0,0,33,1,9,47,3,6,0,1
12,0,0,9,0,6.36,27,3,6,1,1
12,0,0,7,0,9.15,25,3,6,0,1
16,0,0,13,1,11,35,3,6,1,1
12,0,1,7,0,4.5,25,3,6,1,1
12,0,1,16,0,4.8,34,3,6,1,1
13,0,0,0,0,4,19,3,6,0,0
12,0,1,11,0,5.5,29,3,6,1,0
13,0,0,17,0,8.4,36,3,6,1,0
10,0,0,13,0,6.75,29,3,6,1,1
12,0,0,22,1,10,40,1,6,1,0
12,0,1,28,0,5,46,3,6,1,1
11,0,0,17,0,6.5,34,3,6,0,0
12,0,0,24,1,10.75,42,3,6,2,1
3,1,0,55,0,7,64,2,6,1,1
12,1,0,3,0,11.43,21,3,6,2,0
12,0,0,6,1,4,24,1,6,1,0
10,0,0,27,0,9,43,3,6,2,1
12,1,0,19,1,13,37,1,6,1,1
12,0,0,19,1,12.22,37,3,6,2,1
12,0,1,38,0,6.28,56,3,6,1,1
10,1,0,41,1,6.75,57,1,6,1,1
11,1,0,3,0,3.35,20,1,6,1,0
14,0,0,20,1,16,40,3,6,0,1
10,0,0,15,0,5.25,31,3,6,0,1
8,1,0,8,0,3.5,22,2,6,1,1
8,1,1,39,0,4.22,53,3,6,1,1
6,0,1,43,1,3,55,2,6,1,1
11,1,1,25,1,4,42,3,6,1,1
12,0,0,11,1,10,29,3,6,0,1
12,0,0,12,0,5,30,1,6,0,1
12,1,0,35,1,16,53,3,6,1,1
14,0,0,14,0,13.98,34,3,6,0,0
12,0,0,16,1,13.26,34,3,6,0,1
10,0,1,44,1,6.1,60,3,6,1,0
16,1,1,13,0,3.75,35,3,6,0,0
13,0,0,8,1,9,27,1,6,1,0
12,0,0,13,0,9.45,31,3,6,1,0
11,0,0,18,1,5.5,35,3,6,0,1
12,0,1,18,0,8.93,36,3,6,0,1
12,1,1,6,0,6.25,24,3,6,0,0
11,1,0,37,1,9.75,54,3,6,1,1
12,1,0,2,0,6.73,20,3,6,1,1
12,0,0,23,0,7.78,41,3,6,1,1
12,0,0,1,0,2.85,19,3,6,0,0
12,1,1,10,0,3.35,28,1,6,1,1
12,0,0,23,0,19.98,41,3,6,1,1
12,0,0,8,1,8.5,26,1,6,0,1
15,0,1,9,0,9.75,30,3,6,1,1
12,0,0,33,1,15,51,3,6,2,1
12,0,1,19,0,8,37,3,6,1,1
13,0,0,14,0,11.25,33,3,6,0,1
11,0,0,13,1,14,30,3,6,0,1
10,0,0,12,0,10,28,3,6,2,1
12,0,0,8,0,6.5,26,3,6,0,0
12,0,0,23,0,9.83,41,3,6,1,1
14,0,1,13,0,18.5,33,3,6,1,0
12,1,0,9,0,12.5,27,3,6,0,1
14,0,0,21,1,26,41,3,6,0,1
5,1,0,44,0,14,55,3,6,2,1
12,0,0,4,1,10.5,22,3,6,0,1
8,0,0,42,0,11,56,3,6,1,1
13,0,0,10,1,12.47,29,3,6,0,1
12,0,0,11,0,12.5,29,3,6,2,0
12,0,0,40,1,15,58,3,6,2,1
12,0,0,8,0,6,26,3,6,2,0
11,1,0,29,0,9.5,46,3,6,2,1
16,0,0,3,1,5,25,3,6,0,0
11,0,0,11,0,3.75,28,3,6,2,0
12,0,0,12,1,12.57,30,3,6,0,1
8,0,1,22,0,6.88,36,2,6,0,1
12,0,0,12,0,5.5,30,3,6,0,1
12,0,0,7,1,7,25,3,6,0,1
12,0,1,15,0,4.5,33,3,6,1,0
12,0,0,28,0,6.5,46,3,6,0,1
12,1,0,20,1,12,38,3,6,1,1
12,1,0,6,0,5,24,3,6,2,0
12,1,0,5,0,6.5,23,3,6,1,0
9,1,1,30,0,6.8,45,3,6,1,1
13,0,0,18,0,8.75,37,3,6,0,1
12,1,1,6,0,3.75,24,1,6,1,1
12,1,0,16,0,4.5,34,2,6,0,0
12,1,0,1,1,6,19,2,6,0,0
12,0,0,3,0,5.5,21,3,6,1,0
12,0,0,8,0,13,26,3,6,0,1
14,0,0,2,0,5.65,22,3,6,1,0
9,0,0,16,0,4.8,31,1,6,1,0
10,1,0,9,0,7,25,3,6,2,1
12,0,0,2,0,5.25,20,3,6,0,0
7,1,0,43,0,3.35,56,3,6,1,1
9,0,0,38,0,8.5,53,3,6,1,1
12,0,0,9,0,6,27,3,6,0,1
12,1,0,12,0,6.75,30,3,6,0,1
12,0,0,18,0,8.89,36,3,6,1,1
11,0,0,15,1,14.21,32,3,6,1,0
11,1,0,28,1,10.78,45,1,6,2,1
10,1,0,27,1,8.9,43,3,6,2,1
12,1,0,38,0,7.5,56,3,6,0,1
12,0,1,3,0,4.5,21,3,6,1,0
12,0,0,41,1,11.25,59,3,6,0,1
12,1,0,16,1,13.45,34,3,6,0,1
13,1,0,7,0,6,26,3,6,1,1
6,1,1,33,0,4.62,45,1,6,1,0
14,0,0,25,0,10.58,45,3,6,1,1
12,1,0,5,0,5,23,3,6,0,1
14,1,0,17,0,8.2,37,1,6,0,0
12,1,0,1,0,6.25,19,3,6,0,0
12,0,0,13,0,8.5,31,3,6,1,1
16,0,0,18,0,24.98,40,3,1,0,1
14,1,0,21,0,16.65,41,3,1,0,1
14,0,0,2,0,6.25,22,3,1,0,0
12,1,1,4,0,4.55,22,2,1,0,0
12,1,1,30,0,11.25,48,2,1,0,1
13,0,0,32,0,21.25,51,3,1,0,0
17,0,1,13,0,12.65,36,3,1,0,1
12,0,0,17,0,7.5,35,3,1,0,0
14,0,1,26,0,10.25,46,3,1,0,1
16,0,0,9,0,3.35,31,3,1,0,0
16,0,0,8,0,13.45,30,1,1,0,0
15,0,0,1,1,4.84,22,3,1,0,1
17,1,0,32,0,26.29,55,3,1,0,1
12,0,1,24,0,6.58,42,3,1,0,1
14,0,1,1,0,44.5,21,3,1,0,0
12,0,0,42,0,15,60,3,1,1,1
16,0,1,3,0,11.25,25,1,1,1,0
12,0,1,32,0,7,50,3,1,0,1
14,0,0,22,0,10,42,1,1,0,0
16,0,0,18,0,14.53,40,3,1,0,1
18,0,1,19,0,20,43,3,1,0,1
15,0,0,12,0,22.5,33,3,1,0,1
12,0,1,42,0,3.64,60,3,1,0,1
12,1,0,34,0,10.62,52,3,1,0,1
18,0,0,29,0,24.98,53,3,1,0,1
16,1,0,8,0,6,30,3,1,0,0
18,0,0,13,0,19,37,3,1,1,0
16,0,0,10,0,13.2,32,3,1,0,0
16,0,0,22,0,22.5,44,3,1,0,1
16,1,0,10,0,15,32,3,1,0,1
17,0,1,15,0,6.88,38,3,1,0,1
12,0,0,26,0,11.84,44,3,1,0,1
14,0,0,16,0,16.14,36,3,1,0,0
18,0,1,14,0,13.95,38,3,1,0,1
12,0,1,38,0,13.16,56,3,1,0,1
12,1,0,14,0,5.3,32,1,1,0,1
12,0,1,7,0,4.5,25,3,1,0,1
18,1,1,13,0,10,37,3,1,0,0
10,0,0,20,0,10,36,3,1,0,1
16,0,0,7,1,10,29,2,1,0,1
16,0,1,26,0,9.37,48,3,1,0,1
16,0,0,14,0,5.8,36,3,1,0,1
13,0,0,36,0,17.86,55,3,1,0,0
12,0,0,24,0,1,42,3,1,0,1
14,1,0,41,0,8.8,61,3,1,0,1
16,0,0,7,0,9,29,1,1,0,1
17,1,0,14,0,18.16,37,3,1,0,0
12,1,1,1,0,7.81,19,3,1,0,0
16,0,1,6,0,10.62,28,3,1,1,1
12,0,1,3,0,4.5,21,3,1,0,1
15,0,0,31,0,17.25,52,3,1,0,1
13,0,1,14,0,10.5,33,3,1,1,1
14,0,1,13,0,9.22,33,3,1,0,1
16,0,0,26,1,15,48,1,1,1,1
18,0,0,14,0,22.5,38,3,1,0,1
13,0,1,33,0,4.55,52,3,2,0,1
12,0,0,16,0,9,34,3,2,0,1
18,0,0,10,0,13.33,34,3,2,0,1
14,0,0,22,0,15,42,3,2,0,0
14,0,0,2,0,7.5,22,3,2,0,0
12,1,1,29,0,4.25,47,3,2,0,1
12,0,0,43,0,12.5,61,3,2,1,1
12,0,1,5,0,5.13,23,3,2,0,1
16,1,1,14,0,3.35,36,1,2,0,1
12,1,0,28,0,11.11,46,3,2,0,1
11,1,1,25,0,3.84,42,1,2,0,1
12,0,1,45,0,6.4,63,3,2,0,1
14,1,0,5,0,5.56,25,3,2,0,0
12,1,0,20,0,10,38,3,2,1,1
16,0,1,6,0,5.65,28,3,2,0,1
16,0,0,16,0,11.5,38,3,2,0,1
11,0,1,33,0,3.5,50,3,2,0,1
13,1,1,2,0,3.35,21,3,2,0,1
12,1,1,10,0,4.75,28,3,2,0,0
14,1,0,44,0,19.98,64,3,2,0,1
14,1,1,6,0,3.5,26,3,2,0,1
12,0,1,15,0,4,33,3,2,0,0
12,0,0,5,0,7,23,3,2,0,1
13,0,1,4,0,6.25,23,3,2,1,1
14,0,0,14,0,4.5,34,3,2,0,1
14,0,1,32,0,14.29,52,3,2,0,1
12,0,1,14,0,5,32,3,2,0,1
14,0,0,21,0,13.75,41,3,2,0,1
12,0,0,43,1,13.71,61,3,2,0,1
12,1,1,27,0,7.5,45,1,2,0,1
12,0,1,4,0,3.8,22,3,2,0,0
14,0,0,0,0,5,20,2,2,0,0
12,1,0,32,0,9.42,50,3,2,0,1
12,0,0,20,0,5.5,38,3,2,0,1
15,1,0,4,0,3.75,25,3,2,0,0
12,0,0,34,0,3.5,52,3,2,0,1
13,0,0,5,0,5.8,24,3,2,0,0
17,0,0,13,0,12,36,3,2,1,1
14,0,1,17,0,5,37,2,3,0,1
13,1,1,10,0,8.75,29,3,3,0,1
16,0,1,7,0,10,29,3,3,0,1
12,0,1,25,0,8.5,43,3,3,0,0
12,0,1,18,0,8.63,36,1,3,0,1
16,0,1,27,0,9,49,3,3,1,1
16,0,1,2,0,5.5,24,3,3,0,0
13,0,0,13,0,11.11,32,3,3,0,1
14,0,1,24,0,10,44,3,3,0,0
18,1,1,13,0,5.2,37,2,3,0,1
14,0,1,15,1,8,35,3,3,0,0
12,1,1,12,0,3.56,30,2,3,0,0
12,0,1,24,0,5.2,42,3,3,0,1
12,0,1,43,0,11.67,61,3,3,2,1
12,0,1,13,0,11.32,31,3,3,1,1
12,1,1,16,0,7.5,34,3,3,0,1
11,0,1,24,0,5.5,41,3,3,0,1
16,1,1,4,0,5,26,3,3,0,1
12,0,1,24,0,7.75,42,3,3,0,1
12,0,1,45,0,5.25,63,3,3,0,1
12,0,0,20,1,9,38,3,3,0,1
12,0,1,38,0,9.65,56,3,3,0,1
18,1,0,10,0,5.21,34,3,3,0,1
11,0,1,16,0,7,33,1,3,0,1
12,1,1,32,0,12.16,50,1,3,0,1
16,1,1,2,0,5.25,24,3,3,0,0
13,1,1,28,0,10.32,47,3,3,0,0
16,0,0,3,0,3.35,25,1,3,0,0
13,0,1,8,1,7.7,27,3,3,0,0
12,0,1,44,0,9.17,62,3,3,1,1
12,1,0,12,0,8.43,30,3,3,0,1
12,1,0,8,0,4,26,1,3,0,1
12,0,1,4,0,4.13,22,3,3,0,1
12,1,1,28,0,3,46,3,3,0,1
13,1,1,0,0,4.25,19,3,3,0,0
14,1,0,1,0,7.53,21,3,3,0,0
14,0,1,12,0,10.53,32,3,3,1,1
12,0,1,39,0,5,57,3,3,0,1
12,0,1,24,0,15.03,42,3,3,0,1
17,0,1,32,0,11.25,55,1,3,0,1
16,0,0,4,0,6.25,26,1,3,0,0
12,0,1,25,0,3.5,43,1,3,0,0
12,0,0,8,0,6.85,26,1,3,0,0
13,0,1,16,0,12.5,35,3,3,0,1
12,1,0,5,0,12,23,3,3,0,0
13,0,0,31,0,6,50,3,3,0,0
12,0,1,25,0,9.5,43,3,3,0,0
12,0,1,15,0,4.1,33,3,3,0,1
14,1,1,15,0,10.43,35,3,3,0,1
12,0,1,0,0,5,18,3,3,0,0
12,0,0,19,0,7.69,37,3,3,0,1
12,0,1,21,0,5.5,39,1,3,0,0
12,0,1,6,0,6.4,24,3,3,0,0
12,0,1,14,1,12.5,32,3,3,0,1
13,0,1,30,0,6.25,49,3,3,0,1
12,0,1,8,0,8,26,3,3,0,0
9,0,0,33,1,9.6,48,3,3,0,0
13,0,0,16,0,9.1,35,2,3,0,0
12,1,1,20,0,7.5,38,3,3,0,0
13,1,1,6,0,5,25,3,3,0,1
12,0,1,10,1,7,28,3,3,0,1
13,1,1,1,0,3.55,20,3,3,0,0
12,1,0,2,0,8.5,20,1,3,0,0
13,1,1,0,0,4.5,19,3,3,0,0
16,0,0,17,0,7.88,39,1,3,0,1
12,0,1,8,0,5.25,26,3,3,0,0
12,1,0,4,0,5,22,3,3,0,0
12,0,1,15,0,9.33,33,3,3,0,0
12,0,1,29,0,10.5,47,3,3,0,1
12,1,1,23,0,7.5,41,1,3,0,1
12,1,1,39,0,9.5,57,3,3,0,1
12,1,1,14,0,9.6,32,3,3,0,1
17,1,1,6,0,5.87,29,1,3,0,0
14,1,0,12,1,11.02,32,3,3,0,1
12,1,1,26,0,5,44,3,3,0,0
14,0,1,32,0,5.62,52,3,3,0,1
15,0,1,6,0,12.5,27,3,3,0,1
12,0,1,40,0,10.81,58,3,3,0,1
12,0,1,18,0,5.4,36,3,3,1,1
11,0,1,12,0,7,29,3,3,0,0
12,1,1,36,0,4.59,54,3,3,2,1
12,0,1,19,0,6,37,3,3,0,1
16,0,1,42,0,11.71,64,3,3,1,0
13,0,1,2,0,5.62,21,2,3,0,1
12,0,1,33,0,5.5,51,3,3,0,1
12,1,1,14,0,4.85,32,3,3,0,1
12,0,0,22,0,6.75,40,3,3,0,0
12,0,1,20,0,4.25,38,3,3,0,1
12,0,1,15,0,5.75,33,3,3,0,1
12,0,0,35,0,3.5,53,3,3,0,1
12,0,1,7,0,3.35,25,3,3,0,1
12,0,1,45,0,10.62,63,3,3,1,0
12,0,1,9,0,8,27,3,3,0,0
12,1,1,2,0,4.75,20,3,3,0,1
17,1,0,3,0,8.5,26,3,3,0,0
14,0,1,19,1,8.85,39,1,3,0,1
12,1,1,14,0,8,32,3,3,0,1
4,0,0,54,0,6,64,3,4,0,1
14,0,0,17,0,7.14,37,3,4,0,1
8,0,1,29,0,3.4,43,1,4,0,1
15,1,1,26,0,6,47,3,4,0,0
2,0,0,16,0,3.75,24,2,4,0,0
8,0,1,29,0,8.89,43,1,4,0,0
11,0,1,20,0,4.35,37,3,4,0,1
10,1,1,38,0,13.1,54,1,4,0,1
8,1,1,37,0,4.35,51,1,4,0,1
9,0,0,48,0,3.5,63,3,4,0,0
12,0,1,16,0,3.8,34,3,4,0,0
8,0,1,38,0,5.26,52,3,4,0,1
14,0,0,0,0,3.35,20,1,4,0,0
12,0,0,14,1,16.26,32,1,4,0,0
12,0,1,2,0,4.25,20,3,4,0,1
16,0,0,21,0,4.5,43,3,4,0,1
13,0,1,15,0,8,34,3,4,0,1
16,0,1,20,0,4,42,3,4,0,0
14,0,1,12,0,7.96,32,3,4,0,1
12,1,0,7,0,4,25,2,4,0,0
11,0,0,4,0,4.15,21,3,4,0,1
13,1,0,9,0,5.95,28,3,4,0,1
12,1,1,43,0,3.6,61,2,4,0,1
10,1,0,19,0,8.75,35,3,4,0,0
8,0,1,49,0,3.4,63,3,4,0,0
12,0,1,38,0,4.28,56,3,4,0,1
12,0,1,13,0,5.35,31,3,4,0,1
12,0,1,14,0,5,32,3,4,0,1
12,0,0,20,0,7.65,38,3,4,0,0
12,0,1,7,0,6.94,25,3,4,0,0
12,0,1,9,1,7.5,27,3,4,1,1
12,0,1,6,0,3.6,24,3,4,0,0
12,1,1,5,0,1.75,23,3,4,0,1
13,1,1,1,0,3.45,20,1,4,0,0
14,0,0,22,1,9.63,42,3,4,0,1
12,0,1,24,0,8.49,42,3,4,0,1
12,0,1,15,1,8.99,33,3,4,0,0
11,1,1,8,0,3.65,25,3,4,0,1
11,1,1,17,0,3.5,34,3,4,0,1
12,1,0,2,0,3.43,20,1,4,0,0
12,1,0,20,0,5.5,38,3,4,0,1
12,0,0,26,1,6.93,44,3,4,0,1
10,1,1,37,0,3.51,53,1,4,0,1
12,0,1,41,0,3.75,59,3,4,0,0
12,0,1,27,0,4.17,45,3,4,0,1
12,0,1,5,1,9.57,23,3,4,0,1
14,0,0,16,0,14.67,36,1,4,0,1
14,0,1,19,0,12.5,39,3,4,0,1
12,0,0,10,0,5.5,28,3,4,0,1
13,1,0,1,1,5.15,20,3,4,0,0
12,0,1,43,1,8,61,1,4,0,1
13,0,0,3,0,5.83,22,1,4,0,0
12,0,1,0,0,3.35,18,3,4,0,0
12,1,1,26,0,7,44,3,4,0,1
10,0,1,25,1,10,41,3,4,0,1
12,0,1,15,0,8,33,3,4,0,1
14,1,1,10,0,6.88,30,3,4,0,0
11,0,1,45,1,5.55,62,3,4,0,0
11,0,0,3,0,7.5,20,1,4,0,0
8,0,0,47,1,8.93,61,2,4,0,1
16,0,1,6,0,9,28,1,4,0,1
10,1,1,33,0,3.5,49,3,4,0,0
16,0,0,3,0,5.77,25,3,4,1,0
14,0,0,4,1,25,24,2,4,0,0
14,0,0,34,1,6.85,54,1,4,0,1
11,1,0,39,0,6.5,56,3,4,0,1
12,1,1,17,0,3.75,35,3,4,0,1
9,0,0,47,1,3.5,62,3,4,0,1
11,0,0,2,0,4.5,19,3,4,0,0
13,1,0,0,0,2.01,19,3,4,0,0
14,0,1,24,0,4.17,44,3,4,0,0
12,0,0,25,1,13,43,1,4,0,1
14,0,1,6,0,3.98,26,3,4,0,0
12,0,1,10,0,7.5,28,3,4,0,0
12,0,1,33,0,13.12,51,1,4,0,1
12,0,0,12,0,4,30,3,4,0,0
12,1,1,9,0,3.95,27,3,4,0,1
11,1,0,18,1,13,35,3,4,0,1
12,0,0,10,0,9,28,3,4,0,1
8,1,1,45,0,4.55,59,3,4,0,0
9,0,1,46,1,9.5,61,3,4,0,1
7,1,0,14,0,4.5,27,2,4,0,1
11,0,1,36,0,8.75,53,3,4,0,0
13,0,0,34,1,10,53,3,5,2,1
18,0,0,15,0,18,39,3,5,0,1
17,0,0,31,0,24.98,54,3,5,1,1
16,0,1,6,0,12.05,28,3,5,1,0
14,1,0,15,0,22,35,3,5,0,1
12,0,0,30,0,8.75,48,3,5,0,1
18,0,0,8,0,22.2,32,3,5,0,1
18,0,0,5,0,17.25,29,3,5,1,1
17,0,1,3,1,6,26,3,5,0,0
13,1,0,17,0,8.06,36,3,5,0,1
16,0,0,5,1,9.24,27,1,5,1,1
14,0,1,10,0,12,30,3,5,0,1
15,0,1,33,0,10.61,54,3,5,0,0
18,0,0,3,0,5.71,27,3,5,0,1
16,0,1,0,0,10,18,3,5,0,0
16,1,0,13,0,17.5,35,1,5,0,1
18,0,0,12,0,15,36,3,5,0,1
16,0,1,6,0,7.78,28,3,5,0,1
17,0,0,7,0,7.8,30,3,5,0,1
16,1,0,14,1,10,36,3,5,0,1
17,0,1,5,0,24.98,28,3,5,0,0
15,1,1,10,0,10.28,31,3,5,0,1
18,0,1,11,0,15,35,3,5,0,1
17,0,1,24,0,12,47,3,5,0,1
16,0,0,9,0,10.58,31,3,5,1,0
18,1,0,12,0,5.85,36,3,5,0,1
18,0,0,19,0,11.22,43,3,5,0,1
14,0,1,14,0,8.56,34,3,5,0,1
16,0,1,17,0,13.89,39,3,5,1,0
18,1,0,7,0,5.71,31,3,5,0,0
18,0,0,7,0,15.79,31,3,5,0,1
16,0,1,22,0,7.5,44,3,5,0,1
12,0,1,28,0,11.25,46,3,5,0,1
16,0,1,16,0,6.15,38,3,5,0,0
16,1,0,16,0,13.45,38,1,5,0,0
16,0,1,7,0,6.25,29,3,5,0,1
12,0,1,11,0,6.5,29,3,5,0,0
12,0,1,11,0,12,29,3,5,0,1
12,0,1,16,0,8.5,34,3,5,0,0
18,0,0,33,1,8,57,3,5,0,0
12,1,1,21,0,5.75,39,3,5,0,1
16,0,0,4,0,15.73,26,3,5,1,1
15,0,0,13,0,9.86,34,3,5,0,1
18,0,0,14,1,13.51,38,3,5,0,1
16,0,1,10,0,5.4,32,3,5,0,1
18,1,0,14,0,6.25,38,3,5,0,1
16,1,0,29,0,5.5,51,3,5,0,1
12,0,0,4,0,5,22,2,5,0,0
18,0,0,27,0,6.25,51,1,5,0,1
12,0,0,3,0,5.75,21,3,5,0,1
16,1,0,14,1,20.5,36,3,5,0,1
14,0,0,0,0,5,20,3,5,2,1
18,0,0,33,0,7,57,3,5,0,1
16,1,0,38,0,18,60,3,5,0,1
18,0,1,18,1,12,42,3,5,0,1
17,0,0,3,0,20.4,26,3,5,1,0
18,0,1,40,0,22.2,64,3,5,0,0
14,0,0,19,0,16.42,39,3,5,1,0
14,0,1,4,0,8.63,24,3,5,0,0
16,0,1,11,0,19.38,33,3,5,0,1
16,0,1,16,0,14,38,3,5,0,1
14,0,0,22,0,10,42,3,5,0,1
17,0,1,13,1,15.95,36,3,5,0,0
16,1,1,28,1,20,50,3,5,0,1
16,0,1,10,0,10,32,3,5,0,1
16,1,1,5,0,24.98,27,3,5,0,0
15,0,0,5,0,11.25,26,3,5,0,0
18,0,1,37,0,22.83,61,3,5,1,0
17,0,1,26,1,10.2,49,3,5,0,1
16,1,1,4,0,10,26,3,5,0,1
18,0,1,31,1,14,55,3,5,0,0
17,0,1,13,1,12.5,36,3,5,0,1
12,0,1,42,0,5.79,60,3,5,0,1
17,0,0,18,0,24.98,41,2,5,0,1
12,0,1,3,0,4.35,21,3,5,0,1
17,0,1,10,0,11.25,33,3,5,0,0
16,0,1,10,1,6.67,32,3,5,0,0
16,0,1,17,0,8,39,2,5,0,1
18,0,0,7,0,18.16,31,3,5,0,1
16,0,1,14,0,12,36,3,5,0,1
16,0,1,22,1,8.89,44,3,5,0,1
17,0,1,14,0,9.5,37,3,5,0,1
16,0,0,11,0,13.65,33,3,5,0,1
18,0,0,23,1,12,47,3,5,0,1
12,0,0,39,1,15,57,3,5,0,1
16,0,0,15,0,12.67,37,3,5,0,1
14,0,1,15,0,7.38,35,2,5,0,0
16,0,0,10,0,15.56,32,3,5,0,0
12,1,1,25,0,7.45,43,3,5,0,0
14,0,1,12,0,6.25,32,3,5,0,1
16,1,1,7,0,6.25,29,2,5,0,1
17,0,0,7,1,9.37,30,3,5,0,1
16,0,0,17,0,22.5,39,3,5,1,1
16,0,0,10,1,7.5,32,3,5,0,1
17,1,0,2,0,7,25,3,5,0,1
9,1,1,34,1,5.75,49,1,5,0,1
15,0,1,11,0,7.67,32,3,5,0,1
15,0,0,10,0,12.5,31,3,5,0,0
12,1,0,12,0,16,30,3,5,0,1
16,0,1,6,1,11.79,28,3,5,0,0
18,0,0,5,0,11.36,29,3,5,0,0
12,0,1,33,0,6.1,51,1,5,0,1
17,0,1,25,1,23.25,48,1,5,0,1
12,1,0,13,1,19.88,31,3,5,0,1
16,0,0,33,0,15.38,55,3,5,1,1
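`CPS_85_Wages.csv` holds the same 11 variables that `wages.txt` describes, with a header row. That description says wages were log-transformed and that age and work experience are almost perfectly correlated. A first step could look like the sketch below. The helper `load_cps` and the added `LOGWAGE` column name are assumptions for illustration.

```python
import io

import numpy as np
import pandas as pd


def load_cps(source):
    """Read CPS_85_Wages.csv and add LOGWAGE = natural log of WAGE (dollars per hour)."""
    wages = pd.read_csv(source)
    # wages.txt: wages were log-transformed to stabilize the variance.
    wages["LOGWAGE"] = np.log(wages["WAGE"])
    return wages


# Usage with the first five rows copied from the file above;
# in practice call load_cps("CPS_85_Wages.csv").
sample = io.StringIO(
    "EDUCATION,SOUTH,SEX,EXPERIENCE,UNION,WAGE,AGE,RACE,OCCUPATION,SECTOR,MARR\n"
    "8,0,1,21,0,5.1,35,2,6,1,1\n"
    "9,0,1,42,0,4.95,57,3,6,1,1\n"
    "12,0,0,1,0,6.67,19,3,6,1,0\n"
    "12,0,0,4,0,4,22,3,6,0,0\n"
    "12,0,0,17,0,7.5,35,3,6,0,1\n"
)
wages = load_cps(sample)
# Pearson correlation of AGE and EXPERIENCE (wages.txt reports r = .98 on all 534 rows).
print(wages["AGE"].corr(wages["EXPERIENCE"]))
```

A five-row sample only illustrates the call. The reported r = .98 refers to the full file.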

wages.txt

Determinants of Wages from the 1985 Current Population Survey

Summary: The Current Population Survey (CPS) is used to supplement census information between census years. These data consist of a random sample of 534 persons from the CPS, with information on wages and other characteristics of the workers, including sex, number of years of education, years of work experience, occupational status, region of residence and union membership. We wish to determine (i) whether wages are related to these characteristics and (ii) whether there is a gender gap in wages.

Based on residual plots, wages were log-transformed to stabilize the variance. Age and work experience were almost perfectly correlated (r = .98). Multiple regression of log wages against sex, age, years of education, work experience, union membership, southern residence and occupational status showed that these covariates were related to wages (pooled F test, p < .0001). The effect of age was not significant after controlling for experience. Standardized residual plots showed no patterns, except for one large outlier with lower wages than expected. This was a male with 22 years of experience and 12 years of education, in a management position, who lived in the North and was not a union member. Removing this person from the analysis did not substantially change the results, so the final model included the entire sample.

Adjusting for all other variables in the model, females earned 81% (75%, 88%) of the wages of males (p < .0001). Wages increased 41% (28%, 56%) for every 5 additional years of education (p < .0001). They increased by 11% (7%, 14%) for every additional 10 years of experience (p < .0001). Union members were paid 23% (12%, 36%) more than non-union members (p < .0001). Northerners were paid 11% (2%, 20%) more than Southerners (p = .016). Management and professional positions were paid most, and service and clerical positions were paid least (pooled F test, p < .0001).
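The effects above are reported as percentage changes from a model fitted on the log-wage scale. Below is a sketch of that model family, assuming the column names from the variable list in this file.

The helper `fit_log_wage_ols` is hypothetical. It fits ordinary least squares with `numpy.linalg.lstsq`, so it gives point estimates only. It does not produce the confidence intervals, p-values or pooled F tests quoted above; `statsmodels`, installed at the top of this document, is the usual tool for those. OCCUPATION is categorical and would need indicator columns (for example from `pandas.get_dummies`) rather than entering as a number.

The second helper, `percent_effect` (also hypothetical), converts a coefficient back to a percentage. For example, +41% per 5 years of education corresponds to a per-year coefficient of ln(1.41)/5 ≈ 0.069, about +7.1% per year. Likewise, 81% of male wages corresponds to a SEX coefficient of ln(0.81) ≈ -0.21.

```python
import numpy as np
import pandas as pd


def fit_log_wage_ols(wages, predictors):
    """Ordinary least squares of ln(WAGE) on an intercept plus numeric `predictors`.

    Returns the coefficients as a pandas Series indexed by term name.
    Point estimates only: no standard errors, confidence intervals or p-values.
    """
    columns = [np.ones(len(wages))] + [wages[p].to_numpy(dtype=float) for p in predictors]
    X = np.column_stack(columns)
    y = np.log(wages["WAGE"].to_numpy(dtype=float))
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return pd.Series(coef, index=["Intercept", *predictors])


def percent_effect(coef, units=1.0):
    """Percent change in WAGE implied by a log-wage coefficient for a `units` increase."""
    return 100.0 * (np.exp(coef * units) - 1.0)


# Reading the reported effects back onto the log scale:
# "+41% per 5 years of education" -> per-year coefficient ln(1.41) / 5.
edu_per_year = np.log(1.41) / 5
print(edu_per_year, percent_effect(edu_per_year))  # coefficient, % change per single year
# "females earned 81% of the wages of males" -> SEX coefficient ln(0.81).
print(np.log(0.81))

# Hypothetical usage with the real data:
# wages = pd.read_csv("CPS_85_Wages.csv")
# fit_log_wage_ols(wages, ["SEX", "AGE", "EDUCATION", "EXPERIENCE", "UNION", "SOUTH"])
```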
Overall variance explained was R² = .35. In summary, many factors describe the variation in wages: occupational status, years of experience, years of education, sex, union membership and region of residence. However, despite adjustment for all the factors that were available, there still appeared to be a gender gap in wages. There is no readily available explanation for this gender gap.

Authorization: Public Domain

Reference: Berndt, E. R. (1991). The Practice of Econometrics. New York: Addison-Wesley.

Description: The data file contains 534 observations on 11 variables sampled from the Current Population Survey of 1985. This data set demonstrates multiple regression, confounding, transformations, multicollinearity, categorical variables, ANOVA, pooled tests of significance, interactions and model-building strategies.

Variable names in order from left to right:
EDUCATION: Number of years of education.
SOUTH: Indicator variable for Southern Region (1=Person lives in South, 0=Person lives elsewhere).
SEX: Indicator variable for sex (1=Female, 0=Male).
EXPERIENCE: Number of years of work experience.
UNION: Indicator variable for union membership (1=Union member, 0=Not union member).
WAGE: Wage (dollars per hour).
AGE: Age (years).
RACE: Race (1=Other, 2=Hispanic, 3=White).
OCCUPATION: Occupational category (1=Management, 2=Sales, 3=Clerical, 4=Service, 5=Professional, 6=Other).
SECTOR: Sector (0=Other, 1=Manufacturing, 2=Construction).
MARR: Marital Status (0=Unmarried, 1=Married).

8 0 1 21 0 5.1 35 2 6 1 1
9 0 1 42 0 4.95 57 3 6 1 1
12 0 0 1 0 6.67 19 3 6 1 0
12 0 0 4 0 4 22 3 6 0 0
12 0 0 17 0 7.5 35 3 6 0 1
13 0 0 9 1 13.07 28 3 6 0 0
10 1 0 27 0 4.45 43 3 6 0 0
12 0 0 9 0 19.47 27 3 6 0 0
16 0 0 11 0 13.28 33 3 6 1 1
12 0 0 9 0 8.75 27 3 6 0 0
12 0 0 17 1 11.35 35 3 6 0 1
12 0 0 19 1 11.5 37 3 6 1 0
8 1 0 27 0 6.5 41 3 6 0 1
9 1 0 30 1 6.25 45 3 6 0 0
9 1 0 29 0 19.98 44 3 6 0 1
12 0 0 37 0 7.3 55 3 6 2 1
7 1 0 44 0 8 57 3 6 0 1
12 0 0 26 1 22.2 44 3 6 1 1
11 0 0 16 0 3.65 33 3 6 0 0
12 0 0 33 0 20.55 51 3 6 0 1
12 0 1 16 1 5.71 34 3 6 1 1
7 0 0 42 1 7 55 1 6 1 1
12 0 0 9 0 3.75 27 3 6 0 0
11 1 0 14 0 4.5 31 1 6 0 1
12 0 0 23 0 9.56 41 3 6 0 1
6 1 0 45 0 5.75 57 3 6 1 1
12 0 0 8 0 9.36 26 3 6 1 1
10 0 0 30 0 6.5 46 3 6 0 1
12 0 1 8 0 3.35 26 3 6 1 1
12 0 0 8 0 4.75 26 3 6 0 1
14 0 0 13 0 8.9 33 3 6 0 0
12 1 1 46 0 4 64 3 6 0 0
8 0 0 19 0 4.7 33 3 6 0 1
17 1 1 1 0 5 24 3 6 0 0
12 0 0 19 0 9.25 37 3 6 1 0
12 0 0 36 0 10.67 54 1 6 0 0
12 1 0 20 0 7.61 38 1 6 2 1
12 0 0 35 1 10 53 1 6 2 1
12 0 0 3 0 7.5 21 3 6 0 0
14 1 0 10 0 12.2 30 3 6 1 1
12 0 0 0 0 3.35 18 3 6 0 0
14 1 0 14 1 11 34 3 6 1 1
12 0 0 14 0 12 32 3 6 1 1
9 0 1 16 0 4.85 31 3 6 1 1
13 1 0 8 0 4.3 27 3 6 2 0
7 1 1 15 0 6 28 3 6 1 1
16 0 0 12 0 15 34 3 6 1 1
10 1 0 13 0 4.85 29 3 6 0 0
8 0 0 33 1 9 47 3 6 0 1
12 0 0 9 0 6.36 27 3 6 1 1
12 0 0 7 0 9.15 25 3 6 0 1
16 0 0 13 1 11 35 3 6 1 1
12 0 1 7 0 4.5 25 3 6 1 1
12 0 1 16 0 4.8 34 3 6 1 1
13 0 0 0 0 4 19 3 6 0 0
12 0 1 11 0 5.5 29 3 6 1 0
13 0 0 17 0 8.4 36 3 6 1 0
10 0 0 13 0 6.75 29 3 6 1 1
12 0 0 22 1 10 40 1 6 1 0
12 0 1 28 0 5 46 3 6 1 1
11 0 0 17 0 6.5 34 3 6 0 0
12 0 0 24 1 10.75 42 3 6 2 1
3 1 0 55 0 7 64 2 6 1 1
12 1 0 3 0 11.43 21 3 6 2 0
12 0 0 6 1 4 24 1 6 1 0
10 0 0 27 0 9 43 3 6 2 1
12 1 0 19 1 13 37 1 6 1 1
12 0 0 19 1 12.22 37 3 6 2 1
12 0 1 38 0 6.28 56 3 6 1 1
10 1 0 41 1 6.75 57 1 6 1 1
11 1 0 3 0 3.35 20 1 6 1 0
14 0 0 20 1 16 40 3 6 0 1
10 0 0 15 0 5.25 31 3 6 0 1
8 1 0 8 0 3.5 22 2 6 1 1
8 1 1 39 0 4.22 53 3 6 1 1
6 0 1 43 1 3 55 2 6 1 1
11 1 1 25 1 4 42 3 6 1 1
12 0 0 11 1 10 29 3 6 0 1
12 0 0 12 0 5 30 1 6 0 1
12 1 0 35 1 16 53 3 6 1 1
14 0 0 14 0 13.98 34 3 6 0 0
12 0 0 16 1 13.26 34 3 6 0 1
10 0 1 44 1 6.1 60 3 6 1 0
16 1 1 13 0 3.75 35 3 6 0 0
13 0 0 8 1 9 27 1 6 1 0
12 0 0 13 0 9.45 31 3 6 1 0
11 0 0 18 1 5.5 35 3 6 0 1
12 0 1 18 0 8.93 36 3 6 0 1
12 1 1 6 0 6.25 24 3 6 0 0
11 1 0 37 1 9.75 54 3 6 1 1
12 1 0 2 0 6.73 20 3 6 1 1
12 0 0 23 0 7.78 41 3 6 1 1
12 0 0 1 0 2.85 19 3 6 0 0
12 1 1 10 0 3.35 28 1 6 1 1
12 0 0 23 0 19.98 41 3 6 1 1
12 0 0 8 1 8.5 26 1 6 0 1
15 0 1 9 0 9.75 30 3 6 1 1
12 0 0 33 1 15 51 3 6 2 1
12 0 1 19 0 8 37 3 6 1 1
13 0 0 14 0 11.25 33 3 6 0 1
11 0 0 13 1 14 30 3 6 0 1
10 0 0 12 0 10 28 3 6 2 1
12 0 0 8 0 6.5 26 3 6 0 0
12 0 0 23 0 9.83 41 3 6 1 1
14 0 1 13 0 18.5 33 3 6 1 0
12 1 0 9 0 12.5 27 3 6 0 1
14 0 0 21 1 26 41 3 6 0 1
5 1 0 44 0 14 55 3 6 2 1
12 0 0 4 1 10.5 22 3 6 0 1
8 0 0 42 0 11 56 3 6 1 1
13 0 0 10 1 12.47 29 3 6 0 1
12 0 0 11 0 12.5 29 3 6 2 0
12 0 0 40 1 15 58 3 6 2 1
12 0 0 8 0 6 26 3 6 2 0
11 1 0 29 0 9.5 46 3 6 2 1
16 0 0 3 1 5 25 3 6 0 0
11 0 0 11 0 3.75 28 3 6 2 0
12 0 0 12 1 12.57 30 3 6 0 1
8 0 1 22 0 6.88 36 2 6 0 1
12 0 0 12 0 5.5 30 3 6 0 1
12 0 0 7 1 7 25 3 6 0 1
12 0 1 15 0 4.5 33 3 6 1 0
12 0 0 28 0 6.5 46 3 6 0 1
12 1 0 20 1 12 38 3 6 1 1
12 1 0 6 0 5 24 3 6 2 0
12 1 0 5 0 6.5 23 3 6 1 0
9 1 1 30 0 6.8 45 3 6 1 1
13 0 0 18 0 8.75 37 3 6 0 1
12 1 1 6 0 3.75 24 1 6 1 1
12 1 0 16 0 4.5 34 2 6 0 0
12 1 0 1 1 6 19 2 6 0 0
12 0 0 3 0 5.5 21 3 6 1 0
12 0 0 8 0 13 26 3 6 0 1
14 0 0 2 0 5.65 22 3 6 1 0
9 0 0 16 0 4.8 31 1 6 1 0
10 1 0 9 0 7 25 3 6 2 1
12 0 0 2 0 5.25 20 3 6 0 0
7 1 0 43 0 3.35 56 3 6 1 1
9 0 0 38 0 8.5 53 3 6 1 1
12 0 0 9 0 6 27 3 6 0 1
12 1 0 12 0 6.75 30 3 6 0 1
12 0 0 18 0 8.89 36 3 6 1 1
11 0 0 15 1 14.21 32 3 6 1 0
11 1 0 28 1 10.78 45 1 6 2 1
10 1 0 27 1 8.9 43 3 6 2 1
12 1 0 38 0 7.5 56 3 6 0 1
12 0 1 3 0 4.5 21 3 6 1 0
12 0 0 41 1 11.25 59 3 6 0 1
12 1 0 16 1 13.45 34 3 6 0 1
13 1 0 7 0 6 26 3 6 1 1
6 1 1 33 0 4.62 45 1 6 1 0
14 0 0 25 0 10.58 45 3 6 1 1
12 1 0 5 0 5 23 3 6 0 1
14 1 0 17 0 8.2 37 1 6 0 0
12 1 0 1 0 6.25 19 3 6 0 0
12 0 0 13 0 8.5 31 3 6 1 1
16 0 0 18 0 24.98 40 3 1 0 1
14 1 0 21 0 16.65 41 3 1 0 1
14 0 0 2 0 6.25 22 3 1 0 0
12 1 1 4 0 4.55 22 2 1 0 0
12 1 1 30 0 11.25 48 2 1 0 1
13 0 0 32 0 21.25 51 3 1 0 0
17 0 1 13 0 12.65 36 3 1 0 1
12 0 0 17 0 7.5 35 3 1 0 0
14 0 1 26 0 10.25 46 3 1 0 1
16 0 0 9 0 3.35 31 3 1 0 0
16 0 0 8 0 13.45 30 1 1 0 0
15 0 0 1 1 4.84 22 3 1 0 1
17 1 0 32 0 26.29 55 3 1 0 1
12 0 1 24 0 6.58 42 3 1 0 1
14 0 1 1 0 44.5 21 3 1 0 0
12 0 0 42 0 15 60 3 1 1 1
16 0 1 3 0 11.25 25 1 1 1 0
12 0 1 32 0 7 50 3 1 0 1
14 0 0 22 0 10 42 1 1 0 0
16 0 0 18 0 14.53 40 3 1 0 1
18 0 1 19 0 20 43 3 1 0 1
15 0 0 12 0 22.5 33 3 1 0 1
12 0 1 42 0 3.64 60 3 1 0 1
12 1 0 34 0 10.62 52 3 1 0 1
18 0 0 29 0 24.98 53 3 1 0 1
16 1 0 8 0 6 30 3 1 0 0
18 0 0 13 0 19 37 3 1 1 0
16 0 0 10 0 13.2 32 3 1 0 0
16 0 0 22 0 22.5 44 3 1 0 1
16 1 0 10 0 15 32 3 1 0 1
17 0 1 15 0 6.88 38 3 1 0 1
12 0 0 26 0 11.84 44 3 1 0 1
14 0 0 16 0 16.14 36 3 1 0 0
18 0 1 14 0 13.95 38 3 1 0 1
12 0 1 38 0 13.16 56 3 1 0 1
12 1 0 14 0 5.3 32 1 1 0 1
12 0 1 7 0 4.5 25 3 1 0 1
18 1 1 13 0 10 37 3 1 0 0
10 0 0 20 0 10 36 3 1 0 1
16 0 0 7 1 10 29 2 1 0 1
16 0 1 26 0 9.37 48 3 1 0 1
16 0 0 14 0 5.8 36 3 1 0 1
13 0 0 36 0 17.86 55 3 1 0 0
12 0 0 24 0 1 42 3 1 0 1
14 1 0 41 0 8.8 61 3 1 0 1
16 0 0 7 0 9 29 1 1 0 1
17 1 0 14 0 18.16 37 3 1 0 0
12 1 1 1 0 7.81 19 3 1 0 0
16 0 1 6 0 10.62 28 3 1 1 1
12 0 1 3 0 4.5 21 3 1 0 1
15 0 0 31 0 17.25 52 3 1 0 1
13 0 1 14 0 10.5 33 3 1 1 1
14 0 1 13 0 9.22 33 3 1 0 1
16 0 0 26 1 15 48 1 1 1 1
18 0 0 14 0 22.5 38 3 1 0 1
13 0 1 33 0 4.55 52 3 2 0 1
12 0 0 16 0 9 34 3 2 0 1
18 0 0 10 0 13.33 34 3 2 0 1
14 0 0 22 0 15 42 3 2 0 0
14 0 0 2 0 7.5 22 3 2 0 0
12 1 1 29 0 4.25 47 3 2 0 1
12 0 0 43 0 12.5 61 3 2 1 1
12 0 1 5 0 5.13 23 3 2 0 1
16 1 1 14 0 3.35 36 1 2 0 1
12 1 0 28 0 11.11 46 3 2 0 1
11 1 1 25 0 3.84 42 1 2 0 1
12 0 1 45 0 6.4 63 3 2 0 1
14 1 0 5 0 5.56 25 3 2 0 0
12 1 0 20 0 10 38 3 2 1 1
16 0 1 6 0 5.65 28 3 2 0 1
16 0 0 16 0 11.5 38 3 2 0 1
11 0 1 33 0 3.5 50 3 2 0 1
13 1 1 2 0 3.35 21 3 2 0 1
12 1 1 10 0 4.75 28 3 2 0 0
14 1 0 44 0 19.98 64 3 2 0 1
14 1 1 6 0 3.5 26 3 2 0 1
12 0 1 15 0 4 33 3 2 0 0
12 0 0 5 0 7 23 3 2 0 1
13 0 1 4 0 6.25 23 3 2 1 1
14 0 0 14 0 4.5 34 3 2 0 1
14 0 1 32 0 14.29 52 3 2 0 1
12 0 1 14 0 5 32 3 2 0 1
14 0 0 21 0 13.75 41 3 2 0 1
12 0 0 43 1 13.71 61 3 2 0 1
12 1 1 27 0 7.5 45 1 2 0 1
12 0 1 4 0 3.8 22 3 2 0 0
14 0 0 0 0 5 20 2 2 0 0
12 1 0 32 0 9.42 50 3 2 0 1
12 0 0 20 0 5.5 38 3 2 0 1
15 1 0 4 0 3.75 25 3 2 0 0
12 0 0 34 0 3.5 52 3 2 0 1
13 0 0 5 0 5.8 24 3 2 0 0
17 0 0 13 0 12 36 3 2 1 1
14 0 1 17 0 5 37 2 3 0 1
13 1 1 10 0 8.75 29 3 3 0 1
16 0 1 7 0 10 29 3 3 0 1
12 0 1 25 0 8.5 43 3 3 0 0
12 0 1 18 0 8.63 36 1 3 0 1
16 0 1 27 0 9 49 3 3 1 1
16 0 1 2 0 5.5 24 3 3 0 0
13 0 0 13 0 11.11 32 3 3 0 1
14 0 1 24 0 10 44 3 3 0 0
18 1 1 13 0 5.2 37 2 3 0 1
14 0 1 15 1 8 35 3 3 0 0
12 1 1 12 0 3.56 30 2 3 0 0
12 0 1 24 0 5.2 42 3 3 0 1
12 0 1 43 0 11.67 61 3 3 2 1
12 0 1 13 0 11.32 31 3 3 1 1
12 1 1 16 0 7.5 34 3 3 0 1
11 0 1 24 0 5.5 41 3 3 0 1
16 1 1 4 0 5 26 3 3 0 1
12 0 1 24 0 7.75 42 3 3 0 1
12 0 1 45 0 5.25 63 3 3 0 1
12 0 0 20 1 9 38 3 3 0 1
12 0 1 38 0 9.65 56 3 3 0 1
18 1 0 10 0 5.21 34 3 3 0 1
11 0 1 16 0 7 33 1 3 0 1
12 1 1 32 0 12.16 50 1 3 0 1
16 1 1 2 0 5.25 24 3 3 0 0
13 1 1 28 0 10.32 47 3 3 0 0
16 0 0 3 0 3.35 25 1 3 0 0
13 0 1 8 1 7.7 27 3 3 0 0
12 0 1 44 0 9.17 62 3 3 1 1
12 1 0 12 0 8.43 30 3 3 0 1
12 1 0 8 0 4 26 1 3 0 1
12 0 1 4 0 4.13 22 3 3 0 1
12 1 1 28 0 3 46 3 3 0 1
13 1 1 0 0 4.25 19 3 3 0 0
14 1 0 1 0 7.53 21 3 3 0 0
14 0 1 12 0 10.53 32 3 3 1 1
12 0 1 39 0 5 57 3 3 0 1
12 0 1 24 0 15.03 42 3 3 0 1
17 0 1 32 0 11.25 55 1 3 0 1
16 0 0 4 0 6.25 26 1 3 0 0
12 0 1 25 0 3.5 43 1 3 0 0
12 0 0 8 0 6.85 26 1 3 0 0
13 0 1 16 0 12.5 35 3 3 0 1
12 1 0 5 0 12 23 3 3 0 0
13 0 0 31 0 6 50 3 3 0 0
12 0 1 25 0 9.5 43 3 3 0 0
12 0 1 15 0 4.1 33 3 3 0 1
14 1 1 15 0 10.43 35 3 3 0 1
12 0 1 0 0 5 18 3 3 0 0
12 0 0 19 0 7.69 37 3 3 0 1
12 0 1 21 0 5.5 39 1 3 0 0
12 0 1 6 0 6.4 24 3 3 0 0
12 0 1 14 1 12.5 32 3 3 0 1
13 0 1 30 0 6.25 49 3 3 0 1
12 0 1 8 0 8 26 3 3 0 0
9 0 0 33 1 9.6 48 3 3 0 0
13 0 0 16 0 9.1 35 2 3 0 0
12 1 1 20 0 7.5 38 3 3 0 0
13 1 1 6 0 5 25 3 3 0 1
12 0 1 10 1 7 28 3 3 0 1
13 1 1 1 0 3.55 20 3 3 0 0
12 1 0 2 0 8.5 20 1 3 0 0
13 1 1 0 0 4.5 19 3 3 0 0
16 0 0 17 0 7.88 39 1 3 0 1
12 0 1 8 0 5.25 26 3 3 0 0
12 1 0 4 0 5 22 3 3 0 0
12 0 1 15 0 9.33 33 3 3 0 0
12 0 1 29 0 10.5 47 3 3 0 1
12 1 1 23 0 7.5 41 1 3 0 1
12 1 1 39 0 9.5 57 3 3 0 1
12 1 1 14 0 9.6 32 3 3 0 1
17 1 1 6 0 5.87 29 1 3 0 0
14 1 0 12 1 11.02 32 3 3 0 1
12 1 1 26 0 5 44 3 3 0 0
14 0 1 32 0 5.62 52 3 3 0 1
15 0 1 6 0 12.5 27 3 3 0 1
12 0 1 40 0 10.81 58 3 3 0 1
12 0 1 18 0 5.4 36 3 3 1 1
11 0 1 12 0 7 29 3 3 0 0
12 1 1 36 0 4.59 54 3 3 2 1
12 0 1 19 0 6 37 3 3 0 1
16 0 1 42 0 11.71 64 3 3 1 0
13 0 1 2 0 5.62 21 2 3 0 1
12 0 1 33 0 5.5 51 3 3 0 1
12 1 1 14 0 4.85 32 3 3 0 1
12 0 0 22 0 6.75 40 3 3 0 0
12 0 1 20 0 4.25 38 3 3 0 1
12 0 1 15 0 5.75 33 3 3 0 1
12 0 0 35 0 3.5 53 3 3 0 1
12 0 1 7 0 3.35 25 3 3 0 1
12 0 1 45 0 10.62 63 3 3 1 0
12 0 1 9 0 8 27 3 3 0 0
12 1 1 2 0 4.75 20 3 3 0 1
17 1 0 3 0 8.5 26 3 3 0 0
14 0 1 19 1 8.85 39 1 3 0 1
12 1 1 14 0 8 32 3 3 0 1
4 0 0 54 0 6 64 3 4 0 1
14 0 0 17 0 7.14 37 3 4 0 1
8 0 1 29 0 3.4 43 1 4 0 1
15 1 1 26 0 6 47 3 4 0 0
2 0 0 16 0 3.75 24 2 4 0 0
8 0 1 29 0 8.89 43 1 4 0 0
11 0 1 20 0 4.35 37 3 4 0 1
10 1 1 38 0 13.1 54 1 4 0 1
8 1 1 37 0 4.35 51 1 4 0 1
9 0 0 48 0 3.5 63 3 4 0 0
12 0 1 16 0 3.8 34 3 4 0 0
8 0 1 38 0 5.26 52 3 4 0 1
14 0 0 0 0 3.35 20 1 4 0 0
12 0 0 14 1 16.26 32 1 4 0 0
12 0 1 2 0 4.25 20 3 4 0 1
16 0 0 21 0 4.5 43 3 4 0 1
13 0 1 15 0 8 34 3 4 0 1
16 0 1 20 0 4 42 3 4 0 0
14 0 1 12 0 7.96 32 3 4 0 1
12 1 0 7 0 4 25 2 4 0 0
11 0 0 4 0 4.15 21 3 4 0 1
13 1 0 9 0 5.95 28 3 4 0 1
12 1 1 43 0 3.6 61 2 4 0 1
10 1 0 19 0 8.75 35 3 4 0 0
8 0 1 49 0 3.4 63 3 4 0 0
12 0 1 38 0 4.28 56 3 4 0 1
12 0 1 13 0 5.35 31 3 4 0 1
12 0 1 14 0 5 32 3 4 0 1
12 0 0 20 0 7.65 38 3 4 0 0
12 0 1 7 0 6.94 25 3 4 0 0
12 0 1 9 1 7.5 27 3 4 1 1
12 0 1 6 0 3.6 24 3 4 0 0
12 1 1 5 0 1.75 23 3 4 0 1
13 1 1 1 0 3.45 20 1 4 0 0
14 0 0 22 1 9.63 42 3 4 0 1
12 0 1 24 0 8.49 42 3 4 0 1
12 0 1 15 1 8.99 33 3 4 0 0
11 1 1 8 0 3.65 25 3 4 0 1
11 1 1 17 0 3.5 34 3 4 0 1
12 1 0 2 0 3.43 20 1 4 0 0
12 1 0 20 0 5.5 38 3 4 0 1
12 0 0 26 1 6.93 44 3 4 0 1
10 1 1 37 0 3.51 53 1 4 0 1
12 0 1 41 0 3.75 59 3 4 0 0
12 0 1 27 0 4.17 45 3 4 0 1
12 0 1 5 1 9.57 23 3 4 0 1
14 0 0 16 0 14.67 36 1 4 0 1
14 0 1 19 0 12.5 39 3 4 0 1
12 0 0 10 0 5.5 28 3 4 0 1
13 1 0 1 1 5.15 20 3 4 0 0
12 0 1 43 1 8 61 1 4 0 1
13 0 0 3 0 5.83 22 1 4 0 0
12 0 1 0 0 3.35 18 3 4 0 0
12 1 1 26 0 7 44 3 4 0 1
10 0 1 25 1 10 41 3 4 0 1
12 0 1 15 0 8 33 3 4 0 1
14 1 1 10 0 6.88 30 3 4 0 0
11 0 1 45 1 5.55 62 3 4 0 0
11 0 0 3 0 7.5 20 1 4 0 0
8 0 0 47 1 8.93 61 2 4 0 1
16 0 1 6 0 9 28 1 4 0 1
10 1 1 33 0 3.5 49 3 4 0 0
16 0 0 3 0 5.77 25 3 4 1 0
14 0 0 4 1 25 24 2 4 0 0
14 0 0 34 1 6.85 54 1 4 0 1
11 1 0 39 0 6.5 56 3 4 0 1
12 1 1 17 0 3.75 35 3 4 0 1
9 0 0 47 1 3.5 62 3 4 0 1
11 0 0 2 0 4.5 19 3 4 0 0
13 1 0 0 0 2.01 19 3 4 0 0
14 0 1 24 0 4.17 44 3 4 0 0
12 0 0 25 1 13 43 1 4 0 1
14 0 1 6 0 3.98 26 3 4 0 0
12 0 1 10 0 7.5 28 3 4 0 0
12 0 1 33 0 13.12 51 1 4 0 1
12 0 0 12 0 4 30 3 4 0 0
12 1 1 9 0 3.95 27 3 4 0 1
11 1 0 18 1 13 35 3 4 0 1
12 0 0 10 0 9 28 3 4 0 1
8 1 1 45 0 4.55 59 3 4 0 0
9 0 1 46 1 9.5 61 3 4 0 1
7 1 0 14 0 4.5 27 2 4 0 1
11 0 1 36 0 8.75 53 3 4 0 0
13 0 0 34 1 10 53 3 5 2 1
18 0 0 15 0 18 39 3 5 0 1
17 0 0 31 0 24.98 54 3 5 1 1
16 0 1 6 0 12.05 28 3 5 1 0
14 1 0 15 0 22 35 3 5 0 1
12 0 0 30 0 8.75 48 3 5 0 1
18 0 0 8 0 22.2 32 3 5 0 1
18 0 0 5 0 17.25 29 3 5 1 1
17 0 1 3 1 6 26 3 5 0 0
13 1 0 17 0 8.06 36 3 5 0 1
16 0 0 5 1 9.24 27 1 5 1 1
14 0 1 10 0 12 30 3 5 0 1
15 0 1 33 0 10.61 54 3 5 0 0
18 0 0 3 0 5.71 27 3 5 0 1
16 0 1 0 0 10 18 3 5 0 0
16 1
0 13 0 17.5 35 1 5 0 1 18 0 0 12 0 15 36 3 5 0 1 16 0 1 6 0 7.78 28 3 5 0 1 17 0 0 7 0 7.8 30 3 5 0 1 16 1 0 14 1 10 36 3 5 0 1 17 0 1 5 0 24.98 28 3 5 0 0 15 1 1 10 0 10.28 31 3 5 0 1 18 0 1 11 0 15 35 3 5 0 1 17 0 1 24 0 12 47 3 5 0 1 16 0 0 9 0 10.58 31 3 5 1 0 18 1 0 12 0 5.85 36 3 5 0 1 18 0 0 19 0 11.22 43 3 5 0 1 14 0 1 14 0 8.56 34 3 5 0 1 16 0 1 17 0 13.89 39 3 5 1 0 18 1 0 7 0 5.71 31 3 5 0 0 18 0 0 7 0 15.79 31 3 5 0 1 16 0 1 22 0 7.5 44 3 5 0 1 12 0 1 28 0 11.25 46 3 5 0 1 16 0 1 16 0 6.15 38 3 5 0 0 16 1 0 16 0 13.45 38 1 5 0 0 16 0 1 7 0 6.25 29 3 5 0 1 12 0 1 11 0 6.5 29 3 5 0 0 12 0 1 11 0 12 29 3 5 0 1 12 0 1 16 0 8.5 34 3 5 0 0 18 0 0 33 1 8 57 3 5 0 0 12 1 1 21 0 5.75 39 3 5 0 1 16 0 0 4 0 15.73 26 3 5 1 1 15 0 0 13 0 9.86 34 3 5 0 1 18 0 0 14 1 13.51 38 3 5 0 1 16 0 1 10 0 5.4 32 3 5 0 1 18 1 0 14 0 6.25 38 3 5 0 1 16 1 0 29 0 5.5 51 3 5 0 1 12 0 0 4 0 5 22 2 5 0 0 18 0 0 27 0 6.25 51 1 5 0 1 12 0 0 3 0 5.75 21 3 5 0 1 16 1 0 14 1 20.5 36 3 5 0 1 14 0 0 0 0 5 20 3 5 2 1 18 0 0 33 0 7 57 3 5 0 1 16 1 0 38 0 18 60 3 5 0 1 18 0 1 18 1 12 42 3 5 0 1 17 0 0 3 0 20.4 26 3 5 1 0 18 0 1 40 0 22.2 64 3 5 0 0 14 0 0 19 0 16.42 39 3 5 1 0 14 0 1 4 0 8.63 24 3 5 0 0 16 0 1 11 0 19.38 33 3 5 0 1 16 0 1 16 0 14 38 3 5 0 1 14 0 0 22 0 10 42 3 5 0 1 17 0 1 13 1 15.95 36 3 5 0 0 16 1 1 28 1 20 50 3 5 0 1 16 0 1 10 0 10 32 3 5 0 1 16 1 1 5 0 24.98 27 3 5 0 0 15 0 0 5 0 11.25 26 3 5 0 0 18 0 1 37 0 22.83 61 3 5 1 0 17 0 1 26 1 10.2 49 3 5 0 1 16 1 1 4 0 10 26 3 5 0 1 18 0 1 31 1 14 55 3 5 0 0 17 0 1 13 1 12.5 36 3 5 0 1 12 0 1 42 0 5.79 60 3 5 0 1 17 0 0 18 0 24.98 41 2 5 0 1 12 0 1 3 0 4.35 21 3 5 0 1 17 0 1 10 0 11.25 33 3 5 0 0 16 0 1 10 1 6.67 32 3 5 0 0 16 0 1 17 0 8 39 2 5 0 1 18 0 0 7 0 18.16 31 3 5 0 1 16 0 1 14 0 12 36 3 5 0 1 16 0 1 22 1 8.89 44 3 5 0 1 17 0 1 14 0 9.5 37 3 5 0 1 16 0 0 11 0 13.65 33 3 5 0 1 18 0 0 23 1 12 47 3 5 0 1 12 0 0 39 1 15 57 3 5 0 1 16 0 0 15 0 12.67 37 3 5 0 1 14 0 1 15 0 7.38 35 2 5 0 0 16 0 0 10 0 15.56 32 3 5 0 0 12 1 1 25 
0 7.45 43 3 5 0 0 14 0 1 12 0 6.25 32 3 5 0 1 16 1 1 7 0 6.25 29 2 5 0 1 17 0 0 7 1 9.37 30 3 5 0 1 16 0 0 17 0 22.5 39 3 5 1 1 16 0 0 10 1 7.5 32 3 5 0 1 17 1 0 2 0 7 25 3 5 0 1 9 1 1 34 1 5.75 49 1 5 0 1 15 0 1 11 0 7.67 32 3 5 0 1 15 0 0 10 0 12.5 31 3 5 0 0 12 1 0 12 0 16 30 3 5 0 1 16 0 1 6 1 11.79 28 3 5 0 0 18 0 0 5 0 11.36 29 3 5 0 0 12 0 1 33 0 6.1 51 1 5 0 1 17 0 1 25 1 23.25 48 1 5 0 1 12 1 0 13 1 19.88 31 3 5 0 1 16 0 0 33 0 15.38 55 3 5 1 1 Therese Stukel Dartmouth Hitchcock Medical Center One Medical Center Dr. Lebanon, NH 03756 e-mail: stukel@dartmouth.edu |

Python files

stsimple.py

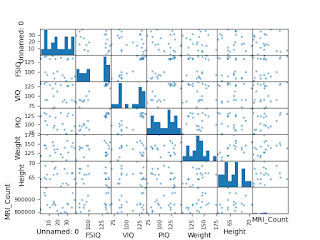

```python
#################### Statistics in Python (including 2-sample t-test: testing for difference across populations) ####################
# Download the input data files from the following websites and save them in your working directory.
# https://scipy-lectures.org/_downloads/brain_size.csv
# https://scipy-lectures.org/_downloads/iris.csv
# http://lib.stat.cmu.edu/datasets/CPS_85_Wages
# Reference
# https://scipy-lectures.org/packages/statistics/index.html

##### import
import os
import urllib.request

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn
from scipy import stats
# from pandas.tools import plotting   # old location, removed from pandas
from pandas.plotting import scatter_matrix
from statsmodels.formula.api import ols
# import statsmodels.api as sm
import statsmodels.formula.api as sm

########## 3.1.1. Data representation and interaction
##### 3.1.1.1. Data as a table
##### 3.1.1.2. The pandas data-frame
### pandas
# Read a CSV file with ';' as the separator and replace '.' by NaN.
#
'''
data is a pandas.DataFrame, the Python equivalent of a spreadsheet table.
It differs from a 2D numpy array in that it has named columns, can contain
a mixture of data types across columns, and has elaborate selection and
pivoting mechanisms.
'''
data = pd.read_csv('brain_size.csv', sep=';', na_values=".")
#
print(data)
'''
    Unnamed: 0  Gender  FSIQ  VIQ  PIQ  Weight  Height  MRI_Count
0            1  Female   133  132  124   118.0    64.5     816932
1            2    Male   140  150  124     NaN    72.5    1001121
...
39          40    Male    89   91   89   179.0    75.5     935863
'''
print(type(data))
# <class 'pandas.core.frame.DataFrame'>
#
# Missing values
# The weight of the second individual is missing in the CSV file. If we don't specify
# the missing value (NA = not available) marker, we will not be able to do statistical analysis.

### numpy arrays
t = np.linspace(-6, 6, 20)
print(t)
'''
[-6.         -5.36842105 -4.73684211 -4.10526316 -3.47368421 -2.84210526
 -2.21052632 -1.57894737 -0.94736842 -0.31578947  0.31578947  0.94736842
  1.57894737  2.21052632  2.84210526  3.47368421  4.10526316  4.73684211
  5.36842105  6.        ]
'''
print(type(t))
# <class 'numpy.ndarray'>
sin_t = np.sin(t)
print(type(sin_t))
# <class 'numpy.ndarray'>
cos_t = np.cos(t)
print(type(cos_t))
# <class 'numpy.ndarray'>

##### conversion from numpy.ndarray to pandas.DataFrame
print(pd.DataFrame({'t': t, 'sin': sin_t, 'cos': cos_t}))

##### manipulating pandas.DataFrame
# print(type(data))
# <class 'pandas.core.frame.DataFrame'>
print(data.shape)
# (40, 8)
print(data.columns)
'''
Index(['Unnamed: 0', 'Gender', 'FSIQ', 'VIQ', 'PIQ', 'Weight', 'Height',
       'MRI_Count'],
      dtype='object')
'''
print(data['Gender'])
'''
0     Female
1       Male
...
39      Male
Name: Gender, dtype: object
'''
# Simpler selector
print(data[data['Gender'] == 'Female']['VIQ'].mean())
# 109.45

# pandas.DataFrame.describe(): a quick view of a large dataframe
print(data.describe())
'''
       Unnamed: 0        FSIQ         VIQ  ...      Weight     Height     MRI_Count
count   40.000000   40.000000   40.000000  ...   38.000000  39.000000  4.000000e+01
mean    20.500000  113.450000  112.350000  ...  151.052632  68.525641  9.087550e+05
std     11.690452   24.082071   23.616107  ...   23.478509   3.994649  7.228205e+04
min      1.000000   77.000000   71.000000  ...  106.000000  62.000000  7.906190e+05
25%     10.750000   89.750000   90.000000  ...  135.250000  66.000000  8.559185e+05
50%     20.500000  116.500000  113.000000  ...  146.500000  68.000000  9.053990e+05
75%     30.250000  135.500000  129.750000  ...  172.000000  70.500000  9.500780e+05
max     40.000000  144.000000  150.000000  ...  192.000000  77.000000  1.079549e+06

[8 rows x 7 columns]
'''
#
print(data[data['Gender'] == 'Female'].describe())
'''
       Unnamed: 0        FSIQ         VIQ         PIQ      Weight     Height     MRI_Count
count   20.000000   20.000000   20.000000   20.000000   20.000000  20.000000      20.00000
mean    19.650000  111.900000  109.450000  110.450000  137.200000  65.765000  862654.60000
std     11.356774   23.686327   21.670924   21.946046   16.953807   2.288248   55893.55578
min      1.000000   77.000000   71.000000   72.000000  106.000000  62.000000  790619.00000
25%     10.250000   90.250000   90.000000   93.000000  125.750000  64.500000  828062.00000
50%     18.000000  115.500000  116.000000  115.000000  138.500000  66.000000  855365.00000
75%     29.250000  133.000000  129.000000  128.750000  146.250000  66.875000  882668.50000
max     38.000000  140.000000  136.000000  147.000000  175.000000  70.500000  991305.00000
'''
# Note that the mean of VIQ for data[data['Gender'] == 'Female'] is 109.45, as confirmed above.

groupby_gender = data.groupby('Gender')
print(groupby_gender)
# <pandas.core.groupby.generic.DataFrameGroupBy object at 0x111769580>
#
for gender, value in groupby_gender['VIQ']:
    print((gender, value.mean()))
'''
('Female', 109.45)
('Male', 115.25)
'''
print(groupby_gender.mean())
'''
        Unnamed: 0   FSIQ     VIQ     PIQ      Weight     Height  MRI_Count
Gender
Female       19.65  111.9  109.45  110.45  137.200000  65.765000   862654.6
Male         21.35  115.0  115.25  111.60  166.444444  71.431579   954855.4
'''
# Other common grouping functions are median, count and sum.

# Exercise
#
# What is the mean value of VIQ for the full population?
print(data.mean(numeric_only=True))  # numeric_only skips the text column 'Gender'
'''
Unnamed: 0        20.500000
FSIQ             113.450000
VIQ              112.350000
PIQ              111.025000
Weight           151.052632
Height            68.525641
MRI_Count     908755.000000
dtype: float64
'''
print(data['VIQ'].mean())
# 112.35
#
# How many males/females were included in this study?
# Hint: use 'tab completion' to find the methods that can be called instead of 'mean' in the above example.
```
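Note that `count()` reports the number of non-missing values per column, so columns with gaps (Weight, Height) show smaller numbers for males. The number of individuals per group is given directly by `size()`. A minimal sketch on five rows copied from brain_size.csv, parsed with the same `sep=';'` and `na_values='.'` options as the script:

```python
import io

import pandas as pd

# Five rows copied from brain_size.csv (rows 1, 2, 3, 5 and 21);
# rows 2 and 21 contain '.' for missing Weight/Height.
csv_text = '\n'.join([
    '"";"Gender";"FSIQ";"VIQ";"PIQ";"Weight";"Height";"MRI_Count"',
    '"1";"Female";133;132;124;"118";"64.5";816932',
    '"2";"Male";140;150;124;".";"72.5";1001121',
    '"3";"Male";139;123;150;"143";"73.3";1038437',
    '"5";"Female";137;132;134;"147";"65.0";951545',
    '"21";"Male";83;83;86;".";".";892420',
])

data = pd.read_csv(io.StringIO(csv_text), sep=';', na_values='.')
by_gender = data.groupby('Gender')

sizes = by_gender.size()     # rows per group, missing values included
counts = by_gender.count()   # non-missing values per column and group
print(sizes)
print(counts[['Weight', 'Height']])
```

Here `sizes` gives 2 females and 3 males, while `count()` reports only 1 male Weight and 2 male Heights because of the `.` entries.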
```python
print(groupby_gender.count())
'''
        Unnamed: 0  FSIQ  VIQ  PIQ  Weight  Height  MRI_Count
Gender
Female          20    20   20   20      20      20         20
Male            20    20   20   20      18      19         20
'''
# NaN is NOT counted: Weight and Height have missing values for males.
#
# What is the average value of MRI counts expressed in log units, for males and females?
print(groupby_gender.mean())
'''
        Unnamed: 0   FSIQ     VIQ     PIQ      Weight     Height  MRI_Count
Gender
Female       19.65  111.9  109.45  110.45  137.200000  65.765000   862654.6
Male         21.35  115.0  115.25  111.60  166.444444  71.431579   954855.4
'''
print(groupby_gender['MRI_Count'].mean())
'''
Gender
Female    862654.6
Male      954855.4
Name: MRI_Count, dtype: float64
'''
# The means above are in raw units; for log units, take the log before averaging:
print(np.log(data['MRI_Count']).groupby(data['Gender']).mean())
#
groupby_gender.boxplot(column=['FSIQ', 'VIQ', 'PIQ'])
plt.savefig("figure_1.png")
# plt.show()
plt.close()  # close each figure so that the next plot starts on a fresh one

### Plotting data
# plotting.scatter_matrix(data[['Weight', 'Height', 'MRI_Count']])
scatter_matrix(data[['Weight', 'Height', 'MRI_Count']])
plt.savefig("figure_2.png")
# plt.show()
plt.close()

scatter_matrix(data[['PIQ', 'VIQ', 'FSIQ']])
plt.savefig("figure_3.png")
# plt.show()
plt.close()

# Exercise
# Plot the scatter matrix for males only, and for females only.
# Do you think that the 2 sub-populations correspond to gender?
scatter_matrix(data[data['Gender'] == 'Male'])
plt.savefig("figure_4.png")
# plt.show()
plt.close()

scatter_matrix(data[data['Gender'] == 'Female'])
plt.savefig("figure_5.png")
# plt.show()
plt.close()

########## 3.1.2. Hypothesis testing: comparing two groups
##### 3.1.2.1. Student's t-test: the simplest statistical test
### 1-sample t-test: testing the value of a population mean
# scipy.stats.ttest_1samp() tests whether the population mean of data is likely to be equal
# to a given value (technically, whether observations are drawn from a Gaussian distribution
# with the given population mean). It returns the T statistic and the p-value
# (see the function's help):
# Is the mean of data['VIQ'] equal to 0?
```
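`stats.ttest_1samp()` computes t = (x̄ - μ0) / (s / √n), where s is the sample standard deviation (ddof=1), and takes a two-sided p-value from Student's t distribution with n - 1 degrees of freedom. A minimal sketch that recomputes this by hand for the first ten VIQ values of brain_size.csv against a hypothesised mean of 100, and compares the result with SciPy:

```python
import numpy as np
from scipy import stats

# First ten VIQ values from brain_size.csv (rows 1-10).
viq = np.array([132, 150, 123, 129, 132, 90, 136, 90, 93, 114], dtype=float)
mu0 = 100.0  # hypothesised population mean

n = viq.size
mean = viq.mean()
sd = viq.std(ddof=1)  # sample standard deviation
t_stat = (mean - mu0) / (sd / np.sqrt(n))
# Two-sided p-value from Student's t distribution with n - 1 degrees of freedom.
p_value = 2 * stats.t.sf(abs(t_stat), df=n - 1)

ref = stats.ttest_1samp(viq, mu0)
print(t_stat, p_value)
print(ref.statistic, ref.pvalue)
```

The two printed lines should agree, which shows that the test is simply the standardised distance between the sample mean and the hypothesised value.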
```python
# stats.ttest_1samp(data['VIQ'], 0)
print(stats.ttest_1samp(data['VIQ'], 0))
# Ttest_1sampResult(statistic=30.088099970849328, pvalue=1.3289196468728067e-28)
# t-value (statistic) and p-value (pvalue)
# With a p-value of about 10^-28 we can claim that the population mean of IQ (VIQ measure) is NOT 0.
print(stats.ttest_1samp(data['VIQ'], 100))
# Ttest_1sampResult(statistic=3.3074146385401786, pvalue=0.002030117404781822)
# With a p-value of 0.002 we can claim that the population mean of IQ (VIQ measure) is NOT 100.
print(stats.ttest_1samp(data['VIQ'], 105))
# Ttest_1sampResult(statistic=1.968380371924721, pvalue=0.05616184962448135)
# With a p-value of 0.056 > 0.05 we CANNOT claim (at the 5% level) that the population mean
# of IQ (VIQ measure) is NOT 105.
print(stats.ttest_1samp(data['VIQ'], 112.35))
# Ttest_1sampResult(statistic=0.0, pvalue=1.0)
# With a p-value of 1.0 we CANNOT claim that the population mean of IQ (VIQ measure) is NOT 112.35.
# This is expected: 112.35 is exactly the sample mean computed above, so t = 0.
# It does not show that the population mean equals 112.35.

### 2-sample t-test: testing for difference across populations
#
# We have seen above that the mean VIQ differs between the male and female samples
# (115.25 vs 109.45). To test whether this difference is significant, we run a
# 2-sample t-test with scipy.stats.ttest_ind():
female_viq = data[data['Gender'] == 'Female']['VIQ']
male_viq = data[data['Gender'] == 'Male']['VIQ']
# stats.ttest_ind(female_viq, male_viq)
print(stats.ttest_ind(female_viq, male_viq))
# Ttest_indResult(statistic=-0.7726161723275011, pvalue=0.44452876778583217)
#
# The test measures whether the average (expected) value differs significantly across samples.
# If we observe a large p-value, for example larger than 0.05 or 0.10, then we CANNOT REJECT
# the null hypothesis of IDENTICAL average scores.
# In this case, we CANNOT say that the difference in average VIQ between males and females
# is statistically significant.
#
# On the contrary, if the p-value is smaller than the threshold, e.g. 1%, 5% or 10%, then we
# can reject the null hypothesis of equal averages
# (i.e., the difference is statistically significant).
#
# See the following website for more detail.
# https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.ttest_ind.html#scipy.stats.ttest_ind

##### 3.1.2.2. Paired tests: repeated measurements on the same individuals
# PIQ, VIQ, and FSIQ give 3 measures of IQ. Let us test whether FSIQ and PIQ are
# significantly different. We can use a 2-sample test:
# stats.ttest_ind(data['FSIQ'], data['PIQ'])
print(stats.ttest_ind(data['FSIQ'], data['PIQ']))
# Ttest_indResult(statistic=0.465637596380964, pvalue=0.6427725009414841)

# groupby_gender.boxplot(column=['FSIQ', 'VIQ', 'PIQ'])
# groupby_gender.boxplot(column=['FSIQ', 'PIQ'])
# print(type(groupby_gender))
# <class 'pandas.core.groupby.generic.DataFrameGroupBy'>
#
# pandas.DataFrame.boxplot
#
# scatter_matrix(data[['Weight', 'Height', 'MRI_Count']])
# scatter_matrix(data[['PIQ', 'VIQ', 'FSIQ']])
#
data[['FSIQ', 'PIQ']].boxplot()
#
plt.savefig("figure_6.png")
# plt.show()
plt.close()

# The problem with this approach is that it ignores the links between observations:
# FSIQ and PIQ are measured on the same individuals. The variance due to inter-subject
# variability is therefore confounding, and can be removed using a "paired test" or
# "repeated measures test":
# stats.ttest_rel(data['FSIQ'], data['PIQ'])
print(stats.ttest_rel(data['FSIQ'], data['PIQ']))
# Ttest_relResult(statistic=1.7842019405859857, pvalue=0.08217263818364236)

# This is equivalent to a 1-sample test on the difference:
#
# stats.ttest_1samp(data['FSIQ'] - data['PIQ'], 0)
print(stats.ttest_1samp(data['FSIQ'] - data['PIQ'], 0))
# Ttest_1sampResult(statistic=1.7842019405859857, pvalue=0.08217263818364236)
#
print(data['FSIQ'] - data['PIQ'])
'''
0      9
1     16
2    -11
...
39     0
dtype: int64
'''
#
print(type(data['FSIQ'] - data['PIQ']))
# <class 'pandas.core.series.Series'>
#
print(type(pd.DataFrame(data['FSIQ'] - data['PIQ'])))
# <class 'pandas.core.frame.DataFrame'>
#
# print(pd.DataFrame(data['FSIQ'] - data['PIQ']).columns)
#
print(pd.DataFrame(data['FSIQ'] - data['PIQ']).columns.values)
# [0]
#
print(type(pd.DataFrame(data['FSIQ'] - data['PIQ']).columns.values))
# <class 'numpy.ndarray'>
#
# The unnamed column is the integer 0 (not the string '0'), so rename it with {0: ...}:
# print(pd.DataFrame(data['FSIQ'] - data['PIQ']).rename(columns={'0': 'FSIQ - PIQ'}).columns.values)
print(pd.DataFrame(data['FSIQ'] - data['PIQ']).rename(columns={0: 'FSIQ - PIQ'}).columns.values)
# ['FSIQ - PIQ']
#
# pd.DataFrame(data['FSIQ'] - data['PIQ']).boxplot()
pd.DataFrame(data['FSIQ'] - data['PIQ']).rename(columns={0: 'FSIQ - PIQ'}).boxplot()
plt.savefig("figure_7.png")
# plt.show()
plt.close()

# Wilcoxon signed-rank test: a non-parametric paired test
print(stats.wilcoxon(data['FSIQ'], data['PIQ']))
# WilcoxonResult(statistic=274.5, pvalue=0.10659492713506856)

##### Exercise
#
### Test the difference between weights in males and females.
#
# female_weight = data[data['Gender'] == 'Female']['Weight']
female_weight = data[data['Gender'] == 'Female']['Weight'].dropna()
# male_weight = data[data['Gender'] == 'Male']['Weight']
male_weight = data[data['Gender'] == 'Male']['Weight'].dropna()  # drop NaN, or the test returns nan
#
print(female_weight)
print(male_weight)
#
# stats.ttest_ind(female_weight, male_weight)
print(stats.ttest_ind(female_weight, male_weight))
# Ttest_indResult(statistic=-4.870950921940696, pvalue=2.227293018362118e-05)
#
# The test measures whether the average (expected) value differs significantly across samples.
# If we observe a large p-value, for example larger than 0.05 or 0.10, then we CANNOT REJECT
# the null hypothesis of IDENTICAL average scores.
# On the contrary, if the p-value is smaller than the threshold, e.g. 1%, 5% or 10%, then we
# can reject the null hypothesis of equal averages
# (i.e., the difference is statistically significant).
```
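The script marks the non-parametric VIQ exercise as omitted. One standard choice for two independent samples is the Mann-Whitney U test (`scipy.stats.mannwhitneyu`), the unpaired counterpart of the Wilcoxon signed-rank test used above for FSIQ vs PIQ. A minimal sketch, with the VIQ values of brain_size.csv split by Gender and hard-coded so it runs without the file:

```python
from scipy import stats

# VIQ values from brain_size.csv, split by the Gender column (20 + 20 rows).
female_viq = [132, 132, 90, 136, 90, 129, 120, 100, 71, 132,
              112, 129, 86, 90, 83, 126, 126, 90, 129, 86]
male_viq = [150, 123, 129, 93, 114, 150, 129, 96, 77, 83,
            107, 145, 145, 96, 145, 96, 96, 150, 90, 91]

# Rank-based test: no assumption that the VIQ scores are Gaussian.
result = stats.mannwhitneyu(female_viq, male_viq, alternative='two-sided')
print(result)
```

With these values the p-value is well above 0.05, in line with the script's conclusion that the data do not support a VIQ difference between males and females.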
```python
#
# In this case, since pvalue < 0.01 = 1%, we CAN say that the difference in average Weight
# between males and females is statistically significant.
#
#
### Use non-parametric statistics to test the difference between VIQ in males and females.
### Conclusion: we find that the data do not support the hypothesis that males and females have different VIQ.
#
# (omitted)

########## 3.1.3. Linear models, multiple factors, and analysis of variance
##### 3.1.3.1. "formulas" to specify statistical models in Python
### A simple linear regression
'''
Given two sets of observations, x and y, we want to test the hypothesis that y is
a linear function of x. In other terms:
    y = x * coef + intercept + e
where e is observation noise. We will use the statsmodels module to:
    1. Fit a linear model. We will use the simplest strategy, ordinary least squares (OLS).
    2. Test that coef is non-zero.
'''
x = np.linspace(-5, 5, 20)
np.random.seed(1)
# normally distributed noise
y = -5 + 3*x + 4 * np.random.normal(size=x.shape)
# Create a data frame containing all the relevant variables
data = pd.DataFrame({'x': x, 'y': y})
print(data)

plt.scatter(data['x'], data['y'])
plt.savefig("figure_8.png")
# plt.show()
plt.close()

# Then we specify an OLS model and fit it:
model = ols("y ~ x", data).fit()
print(model.summary())
'''
                            OLS Regression Results
==============================================================================
Dep. Variable:                      y   R-squared:                       0.804
Model:                            OLS   Adj. R-squared:                  0.794
Method:                 Least Squares   F-statistic:                     74.03
Date:                Tue, 09 Jun 2020   Prob (F-statistic):           8.56e-08
Time:                        10:34:33   Log-Likelihood:                -57.988
No. Observations:                  20   AIC:                             120.0
Df Residuals:                      18   BIC:                             122.0
Df Model:                           1
Covariance Type:            nonrobust
==============================================================================
                 coef    std err          t      P>|t|      [0.025      0.975]
------------------------------------------------------------------------------
Intercept     -5.5335      1.036     -5.342      0.000      -7.710      -3.357
x              2.9369      0.341      8.604      0.000       2.220       3.654
==============================================================================
Omnibus:                        0.100   Durbin-Watson:                   2.956
Prob(Omnibus):                  0.951   Jarque-Bera (JB):                0.322
Skew:                          -0.058   Prob(JB):                        0.851
Kurtosis:                       2.390   Cond. No.                         3.03
==============================================================================

Warnings:
[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.
'''

### Exercise
# Retrieve the estimated parameters from the model above.
# Hint: use tab-completion to find the relevant attribute.
# See the coef of Intercept and x, and also t and P>|t| (= 0.000 < 0.01) for Intercept and x.
print(model.params)  # Intercept about -5.5335, x about 2.9369 (the coef column above)

### Categorical variables: comparing groups or multiple categories
data = pd.read_csv('brain_size.csv', sep=';', na_values=".")
#
print(data)
'''
    Unnamed: 0  Gender  FSIQ  VIQ  PIQ  Weight  Height  MRI_Count
0            1  Female   133  132  124   118.0    64.5     816932
1            2    Male   140  150  124     NaN    72.5    1001121
...
39          40    Male    89   91   89   179.0    75.5     935863
'''
print(type(data))
# <class 'pandas.core.frame.DataFrame'>

model = ols("VIQ ~ Gender + 1", data).fit()
# Intercept: we can remove the intercept using - 1 in the formula, or force the use of an intercept using + 1.
#
print(model.summary())
'''
                            OLS Regression Results
==============================================================================
Dep. Variable:                    VIQ   R-squared:                       0.015
Model:                            OLS   Adj. R-squared:                 -0.010
Method:                 Least Squares   F-statistic:                    0.5969
Date:                Tue, 09 Jun 2020   Prob (F-statistic):              0.445
Time:                        10:43:06   Log-Likelihood:                -182.42
No. Observations:                  40   AIC:                             368.8
Df Residuals:                      38   BIC:                             372.2
Df Model:                           1
Covariance Type:            nonrobust
==================================================================================
                     coef    std err          t      P>|t|      [0.025      0.975]
----------------------------------------------------------------------------------
Intercept        109.4500      5.308     20.619      0.000      98.704     120.196
Gender[T.Male]     5.8000      7.507      0.773      0.445      -9.397      20.997
==============================================================================
Omnibus:                       26.188   Durbin-Watson:                   1.709
Prob(Omnibus):                  0.000   Jarque-Bera (JB):                3.703
Skew:                           0.010   Prob(JB):                        0.157
Kurtosis:                       1.510   Cond. No.                         2.62
==============================================================================

Warnings:
[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.
'''
# The t value (0.773) and p-value (0.445) of Gender[T.Male] match the 2-sample t-test
# of female_viq vs male_viq above (statistic=-0.7726, pvalue=0.4445), up to the sign.

# An integer column can be forced to be treated as categorical using C():
model = ols('VIQ ~ C(Gender)', data).fit()
print(model.summary())
'''
                            OLS Regression Results
==============================================================================
Dep. Variable:                    VIQ   R-squared:                       0.015
Model:                            OLS   Adj. R-squared:                 -0.010
Method:                 Least Squares   F-statistic:                    0.5969
Date:                Tue, 09 Jun 2020   Prob (F-statistic):              0.445
Time:                        10:45:38   Log-Likelihood:                -182.42
No. Observations:                  40   AIC:                             368.8
Df Residuals:                      38   BIC:                             372.2
Df Model:                           1
Covariance Type:            nonrobust
=====================================================================================
                        coef    std err          t      P>|t|      [0.025      0.975]
-------------------------------------------------------------------------------------
Intercept           109.4500      5.308     20.619      0.000      98.704     120.196
C(Gender)[T.Male]     5.8000      7.507      0.773      0.445      -9.397      20.997
==============================================================================
Omnibus:                       26.188   Durbin-Watson:                   1.709
Prob(Omnibus):                  0.000   Jarque-Bera (JB):                3.703
Skew:                           0.010   Prob(JB):                        0.157
Kurtosis:                       1.510   Cond. No.                         2.62
==============================================================================

Warnings:
[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.
'''

##### Link to t-tests between different FSIQ and PIQ
# To compare different types of IQ, we need to create a "long-form" table, listing IQs,
# where the type of IQ is indicated by a categorical variable:
data_fsiq = pd.DataFrame({'iq': data['FSIQ'], 'type': 'fsiq'})
data_piq = pd.DataFrame({'iq': data['PIQ'], 'type': 'piq'})
data_long = pd.concat((data_fsiq, data_piq))
print(data_long)
'''
     iq  type
0   133  fsiq
1   140  fsiq
2   139  fsiq
3   133  fsiq
4   137  fsiq
..  ...   ...
35  128   piq
36  124   piq
37   94   piq
38   74   piq
39   89   piq

[80 rows x 2 columns]
'''
model = ols("iq ~ type", data_long).fit()
print(model.summary())
'''
                            OLS Regression Results
==============================================================================
Dep. Variable:                     iq   R-squared:                       0.003
Model:                            OLS   Adj. R-squared:                 -0.010
Method:                 Least Squares   F-statistic:                    0.2168
Date:                Tue, 09 Jun 2020   Prob (F-statistic):              0.643
Time:                        10:48:47   Log-Likelihood:                -364.35
No. Observations:                  80   AIC:                             732.7
Df Residuals:                      78   BIC:                             737.5
Df Model:                           1
Covariance Type:            nonrobust
===============================================================================
                  coef    std err          t      P>|t|      [0.025      0.975]
-------------------------------------------------------------------------------
Intercept     113.4500      3.683     30.807      0.000     106.119     120.781
type[T.piq]    -2.4250      5.208     -0.466      0.643     -12.793       7.943
==============================================================================
Omnibus:                      164.598   Durbin-Watson:                   1.531
Prob(Omnibus):                  0.000   Jarque-Bera (JB):                8.062
Skew:                          -0.110   Prob(JB):                       0.0178
Kurtosis:                       1.461   Cond. No.                         2.62
==============================================================================

Warnings:
[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.
'''
# The t value (-0.466) and p-value (0.643) of type[T.piq] match the 2-sample t-test below:
# same magnitude, opposite sign, because the coefficient is PIQ minus FSIQ while
# ttest_ind(FSIQ, PIQ) uses FSIQ minus PIQ.
print(stats.ttest_ind(data['FSIQ'], data['PIQ']))
# Ttest_indResult(statistic=0.465637596380964, pvalue=0.6427725009414841)

##### 3.1.3.2. Multiple Regression: including multiple factors
# Consider a linear model explaining a variable z (the dependent variable) with 2 variables x and y:
#     z = x * c_1 + y * c_2 + i + e
# Such a model can be seen in 3D as fitting a plane to a cloud of (x, y, z) points.
data = pd.read_csv('iris.csv')
model = ols('sepal_width ~ name + petal_length', data).fit()
print(model.summary())
'''
                            OLS Regression Results
==============================================================================
Dep. Variable:            sepal_width   R-squared:                       0.478
Model:                            OLS   Adj. R-squared:                  0.468
Method:                 Least Squares   F-statistic:                     44.63
Date:                Tue, 09 Jun 2020   Prob (F-statistic):           1.58e-20
Time:                        11:16:28   Log-Likelihood:                -38.185
No. Observations:                 150   AIC:                             84.37
Df Residuals:                     146   BIC:                             96.41
Df Model:                           3
Covariance Type:            nonrobust
======================================================================================
                         coef    std err          t      P>|t|      [0.025      0.975]
--------------------------------------------------------------------------------------
Intercept              2.9813      0.099     29.989      0.000       2.785       3.178
name[T.versicolor]    -1.4821      0.181     -8.190      0.000      -1.840      -1.124
name[T.virginica]     -1.6635      0.256     -6.502      0.000      -2.169      -1.158
petal_length           0.2983      0.061      4.920      0.000       0.178       0.418
==============================================================================
Omnibus:                        2.868   Durbin-Watson:                   1.753
Prob(Omnibus):                  0.238   Jarque-Bera (JB):                2.885
Skew:                          -0.082   Prob(JB):                        0.236
Kurtosis:                       3.659   Cond. No.                         54.0
==============================================================================

Warnings:
[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.
'''
# scatter_matrix(data[['sepal_length', 'sepal_width', 'petal_length', 'petal_width']])
#
# Express the names as categories (codes 0, 1, 2 = setosa, versicolor, virginica)
categories = pd.Categorical(data['name'])
#
# The parameter 'c' is passed to plt.scatter and controls the color.
# Map the codes to explicit colors so that the title below matches the plot.
colors = np.array(['blue', 'green', 'red'])[categories.codes]
# plotting.scatter_matrix(data, c=categories.codes, marker='o')
scatter_matrix(data, c=colors, marker='o')
#
fig = plt.gcf()
fig.suptitle("blue: setosa, green: versicolor, red: virginica", size=13)
#
plt.savefig("figure_9.png")
# plt.show()
plt.close()

##### 3.1.3.3. Post-hoc hypothesis testing: analysis of variance (ANOVA)
'''
In the above iris example, we wish to test whether sepal width differs between versicolor
and virginica, after removing the effect of petal length. This can be formulated as testing
the difference between the coefficients associated with versicolor and virginica in the
linear model estimated above (it is an Analysis of Variance, ANOVA). For this, we write a
vector of 'contrast' on the estimated parameters (Intercept, name[T.versicolor],
name[T.virginica], petal_length): we want to test
"name[T.versicolor] - name[T.virginica]" with an F-test:
'''
print(model.f_test([0, 1, -1, 0]))
# <F test: F=array([[3.24533535]]), p=0.07369058781701113, df_denom=146, df_num=1>
#
# Is this difference significant?
#
# No, not at the 5% level, as p = 0.074 > 0.05.

########## 3.1.4. More visualization: seaborn for statistical exploration
# data = pd.read_csv('CPS_85_Wages.csv', sep=',', na_values=".")
data = pd.read_csv('CPS_85_Wages.csv', sep=',')
print(data)
'''
     EDUCATION  SOUTH  SEX  EXPERIENCE  UNION   WAGE  AGE  RACE  OCCUPATION  SECTOR  MARR
0            8      0    1          21      0   5.10   35     2           6       1     1
1            9      0    1          42      0   4.95   57     3           6       1     1
2           12      0    0           1      0   6.67   19     3           6       1     0
3           12      0    0           4      0   4.00   22     3           6       0     0
4           12      0    0          17      0   7.50   35     3           6       0     1
..         ...    ...  ...         ...    ...    ...  ...   ...         ...     ...   ...
529         18      0    0           5      0  11.36   29     3           5       0     0
530         12      0    1          33      0   6.10   51     1           5       0     1
531         17      0    1          25      1  23.25   48     1           5       0     1
532         12      1    0          13      1  19.88   31     3           5       0     1
533         16      0    0          33      0  15.38   55     3           5       1     1

[534 rows x 11 columns]
'''

##### 3.1.4.1. Pairplot: scatter matrices
# We can easily get an intuition of the interactions between continuous variables
# using seaborn.pairplot() to display a scatter matrix:
seaborn.pairplot(data, vars=['WAGE', 'AGE', 'EDUCATION'], kind='reg')
#
plt.savefig("figure_10.png")
# plt.show()
plt.close()

# Categorical variables can be plotted as the hue:
#
seaborn.pairplot(data, vars=['WAGE', 'AGE', 'EDUCATION'], kind='reg', hue='SEX')
#
plt.savefig("figure_11.png")
# plt.show()
plt.close()

# Look and feel and matplotlib settings
# Seaborn changes the default of matplotlib figures to achieve a more "modern", "excel-like" look.
# Older seaborn versions did this upon import; recent versions apply the style only when
# seaborn.set_theme() is called. You can reset the default using:
#
## plt.rcdefaults()
## This does NOT work.
#
# plt.savefig("figure_12.png")
# plt.show()
# plt.close()

##### 3.1.4.2. lmplot: plotting a univariate regression
seaborn.lmplot(y='WAGE', x='EDUCATION', data=data)
plt.savefig("figure_12.png")
# plt.show()
plt.close()

########## 3.1.5. Testing for interactions
# result = sm.ols(formula='wage ~ education + gender + education * gender', data=data).fit()
# result = ols(formula='wage ~ education + gender + education * gender', data=data).fit()
# result = ols(formula='WAGE ~ EDUCATION + SEX + EDUCATION * SEX', data=data).fit()
# print(result.summary())
'''
if not os.path.exists('wages.txt'):
    # Download the file if it is not present
    urllib.request.urlretrieve('http://lib.stat.cmu.edu/datasets/CPS_85_Wages', 'wages.txt')
'''
# EDUCATION: Number of years of education
# SEX: 1=Female, 0=Male
# WAGE: Wage (dollars per hour)
data = pd.read_csv('wages.txt', skiprows=27, skipfooter=6, sep=None,
                   engine='python',  # sep=None (delimiter sniffing) and skipfooter need the python engine
                   header=None, names=['education', 'gender', 'wage'],
                   usecols=[0, 2, 5],
                   )
print(data)

# Convert genders to strings (this is particularly useful so that the
# statsmodels formulas detect that gender is a categorical variable)
# import numpy as np
data['gender'] = np.choose(data.gender, ['male', 'female'])

# Log-transform the wages, because they typically are increased with
# multiplicative factors
```
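The comment above motivates the log transform: when wages differ by multiplicative factors, taking log10 turns those factors into constant additive shifts, which a linear model can capture. A minimal sketch with hypothetical wage values (not taken from the CPS file):

```python
import numpy as np

# Hypothetical hourly wages; "high" is "low" scaled by a constant factor of 1.25.
low = np.array([4.0, 8.0, 16.0])
high = 1.25 * low

# On the raw scale the gaps grow with the wage level ...
print(high - low)   # [1. 2. 4.]
# ... but on the log10 scale they are one constant additive shift.
log_gap = np.log10(high) - np.log10(low)
print(log_gap)      # every entry equals log10(1.25)

# Reading a log10-scale coefficient back as a multiplicative factor.
factor = 10 ** 0.1008
print(round(factor, 3))
```

With this transform, a coefficient b on the log10 scale corresponds to multiplying the wage by 10**b. For the gender[T.male] estimate of 0.1008 in the first wage model, that is a factor of roughly 1.26 with education held fixed.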
```python
data['wage'] = np.log10(data['wage'])

# simple plotting
# import seaborn
# Plot 2 linear fits for male and female.
seaborn.lmplot(y='wage', x='education', hue='gender', data=data)
plt.savefig("figure_13.png")
# plt.show()
plt.close()

# Note that this model is not the plot displayed above: it is one
# joined model for male and female, not separate models for male and
# female. The reason is that a single model enables statistical testing.
result = sm.ols(formula='wage ~ education + gender', data=data).fit()
print(result.summary())
'''
                            OLS Regression Results
==============================================================================
Dep. Variable:                   wage   R-squared:                       0.193
Model:                            OLS   Adj. R-squared:                  0.190
Method:                 Least Squares   F-statistic:                     63.42
Date:                Tue, 09 Jun 2020   Prob (F-statistic):           2.01e-25
Time:                        12:05:35   Log-Likelihood:                 86.654
No. Observations:                 534   AIC:                            -167.3
Df Residuals:                     531   BIC:                            -154.5
Df Model:                           2
Covariance Type:            nonrobust
==================================================================================
                     coef    std err          t      P>|t|      [0.025      0.975]
----------------------------------------------------------------------------------
Intercept          0.4053      0.046      8.732      0.000       0.314       0.496
gender[T.male]     0.1008      0.018      5.625      0.000       0.066       0.136
education          0.0334      0.003      9.768      0.000       0.027       0.040
==============================================================================
Omnibus:                        4.675   Durbin-Watson:                   1.792
Prob(Omnibus):                  0.097   Jarque-Bera (JB):                4.876
Skew:                          -0.147   Prob(JB):                       0.0873
Kurtosis:                       3.365   Cond. No.                         69.7
==============================================================================

Warnings:
[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.
'''
result = sm.ols(formula='wage ~ education + gender + education * gender', data=data).fit()
print(result.summary())
'''
                            OLS Regression Results
==============================================================================
Dep. Variable:                   wage   R-squared:                       0.198
Model:                            OLS   Adj. R-squared:                  0.194
Method:                 Least Squares   F-statistic:                     43.72
Date:                Tue, 09 Jun 2020   Prob (F-statistic):           2.94e-25
Time:                        12:01:44   Log-Likelihood:                 88.503
No. Observations:                 534   AIC:                            -169.0
Df Residuals:                     530   BIC:                            -151.9
Df Model:                           3
Covariance Type:            nonrobust
============================================================================================
                               coef    std err          t      P>|t|      [0.025      0.975]
--------------------------------------------------------------------------------------------
Intercept                    0.2998      0.072      4.173      0.000       0.159       0.441
gender[T.male]               0.2750      0.093      2.972      0.003       0.093       0.457
education                    0.0415      0.005      7.647      0.000       0.031       0.052
education:gender[T.male]    -0.0134      0.007     -1.919      0.056      -0.027       0.000
==============================================================================
Omnibus:                        4.838   Durbin-Watson:                   1.825
Prob(Omnibus):                  0.089   Jarque-Bera (JB):                5.000
Skew:                          -0.156   Prob(JB):                       0.0821
Kurtosis:                       3.356   Cond. No.                         194.
==============================================================================

Warnings:
[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.
'''
# The interaction term education:gender[T.male] has p = 0.056, i.e. it is not significant at the 5% level.
```
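In the patsy-style formulas used by statsmodels, `a * b` already expands to `a + b + a:b`, so the script's `education + gender + education * gender` fits the same model as `education * gender`; the repeated terms are de-duplicated. A minimal sketch on hypothetical toy data (not the CPS wages file) that checks both formulas give identical coefficients:

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf

# Hypothetical toy data: 40 rows, alternating genders, made-up log-wages.
rng = np.random.default_rng(0)
n = 40
df = pd.DataFrame({
    'education': rng.integers(8, 19, size=n),
    'gender': np.where(np.arange(n) % 2 == 0, 'male', 'female'),
})
df['wage'] = 0.3 + 0.04 * df['education'] + rng.normal(0, 0.1, size=n)

redundant = smf.ols('wage ~ education + gender + education * gender', data=df).fit()
compact = smf.ols('wage ~ education * gender', data=df).fit()

print(list(redundant.params.index))
print(list(compact.params.index))
```

Both fits report the same four parameters (Intercept, gender, education and the education:gender interaction), so the shorter formula is enough.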

Figures

from figure_1.png to figure_13.png
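The script saves each figure with `plt.savefig()`. Keeping figures separate matters because some pandas plotting calls, such as `DataFrame.boxplot()` without an `ax` argument, draw into the current axes, so a plot can land on a figure that is still open from an earlier step. A minimal sketch of an explicit-figure pattern, assuming the non-interactive Agg backend and a temporary output directory (both are choices for this example only):

```python
import os
import tempfile

import matplotlib
matplotlib.use('Agg')  # non-interactive backend: render to files only
import matplotlib.pyplot as plt
import pandas as pd

# First four rows of FSIQ and PIQ from brain_size.csv.
df = pd.DataFrame({'FSIQ': [133, 140, 139, 133], 'PIQ': [124, 124, 150, 128]})

out_dir = tempfile.mkdtemp()
path = os.path.join(out_dir, 'figure_6.png')

fig, ax = plt.subplots()  # a fresh figure with its own axes
df.boxplot(ax=ax)         # draw into these axes, not whatever is current
fig.savefig(path)
plt.close(fig)            # release the figure before the next plot

print(os.path.exists(path), plt.get_fignums())
```

Passing `ax` explicitly and closing each figure after saving keeps every PNG limited to the plot it is meant to show.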

Reference
